manipulation with mobile robots

DISSERTATION

submitted in partial fulfillment

of the requirements for the degree

Doktor Ingenieur

(Doctor of Engineering)

in the

Faculty of Electrical Engineering and Information Technology

at TU Dortmund University

by

Dipl.-Ing. Thomas Nierobisch

Schwäbisch Gmünd, Germany

Date of submission: 21st January 2014

First examiner: Univ.-Prof. Dr.-Ing. Prof. h.c. Dr. h.c. Torsten Bertram

Second examiner: Univ.-Prof. Dr.-Ing. Bernd Tibken

Date of approval: 22nd June 2015

frontier, it is exciting and disorganised; there is often no reliable authority to appeal to - many useful ideas have no theoretical grounding, and some theories are useless in practice."

Forsyth and Ponce

Authors of Computer Vision: A Modern Approach

In the future, autonomous service robots are supposed to remove the burden of monotonous and tedious tasks like pickup and delivery from people. Vision, being the most important human sensor and feedback system, is expected to play a prominent role in the future of robotics. Robust techniques for visual robot navigation, object recognition and vision assisted object manipulation are essential in service robotics tasks. Mobile manipulation in service robotics applications requires the alignment of the end-effector with recognized objects of unknown pose. Image based visual servoing provides a means of model-free manipulation of objects solely relying on 2D image information.

In this thesis contributions to the field of decoupled visual servoing for object manipulation as well as navigation are presented. A novel approach for large view visual servoing of mobile robots is presented by decoupling the gaze and navigation control via a virtual camera plane, which enables the visual controller to use the same natural landmarks efficiently over a large range of motion. In order to complete the repertoire of reactive visual behaviors, an innovative door passing behavior and an obstacle avoidance behavior using omnivision are designed. The developed visual behaviors represent a significant step towards the model-free visual navigation paradigm relying solely on visual perception. A novel approach for visual servoing based on augmented image features is presented, which has only four off-diagonal couplings between the visual moments and the degrees of motion. As the visual servoing relies on unique image features, object recognition and pose alignment of the manipulator rely on the same representation of the object. In many scenarios the features extracted in the reference pose are only perceivable across a limited region of the work space. This necessitates the introduction of additional intermediate reference views of the object and requires path planning in view space. In this thesis a model-free approach for optimal large view visual servoing by switching between reference views in order to minimize the time to convergence is presented.

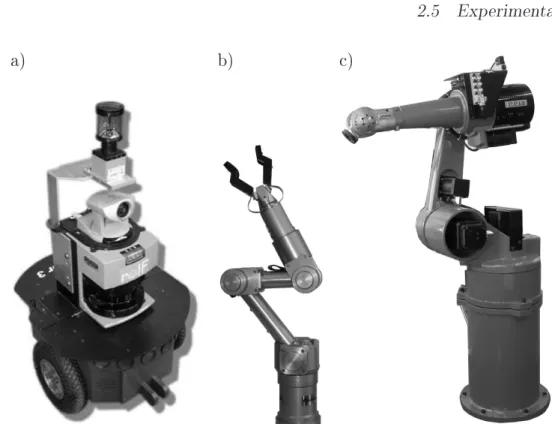

The efficiency and robustness of the proposed visual control schemes are evaluated in the virtual reality and on the real mobile platform as well as on two different manipulators. The experiments are performed successfully in different scenarios in realistic office environments without any prior structuring. Therefore this thesis presents a major contribution towards vision as the universal sensor for mobile manipulation.

In the future, autonomous service robots are expected to relieve people of monotonous and physically strenuous tasks, for example by performing fetch-and-carry services. Visual perception is the most important human sense and feedback system and will therefore play a prominent role in future robotics applications. Robust methods for image-based navigation, object recognition and manipulation are essential for applications in service robotics. Mobile manipulation in service robotics requires the alignment of the end-effector with recognized objects of unknown pose. Image-based visual servoing enables model-free object manipulation solely by considering the two-dimensional image information.

This thesis presents contributions to decoupled image-based visual servoing for object manipulation as well as for navigation. A novel approach for image-based large view visual servoing of mobile robots is introduced. Here, the gaze control and the navigation control are decoupled by a virtual camera plane, which allows the image-based controller to use the same natural landmarks efficiently over a wide range of motion. To complete the repertoire of visual behaviors, an innovative door passing behavior and an obstacle avoidance behavior based on omnidirectional perception are developed. The designed visual behaviors represent an important step towards the paradigm of purely model-free visual navigation. A novel approach based on image features with an extended number of attributes is introduced which, after a decoupling of the input variables, exhibits only four undesired couplings between the image moments and the degrees of freedom of motion. In many application scenarios the extracted reference features are visible only in a limited region of the work space. This requires the introduction of additional intermediate views of the object as well as path planning in the two-dimensional image space. This thesis therefore presents a model-free method for time-optimal image-based large view visual servoing that switches between the individual reference views in order to minimize the convergence time.

The efficiency and robustness of the proposed image-based controllers are verified in virtual reality, on the real mobile platform and on two different manipulators. The experiments are carried out in different scenarios in everyday office environments without prior structuring. This thesis represents an important step towards visual perception as the sole and universal sensor for mobile manipulation.

1 Introduction

1.1 Mobile manipulation

1.2 Related work

1.3 Objective of this thesis

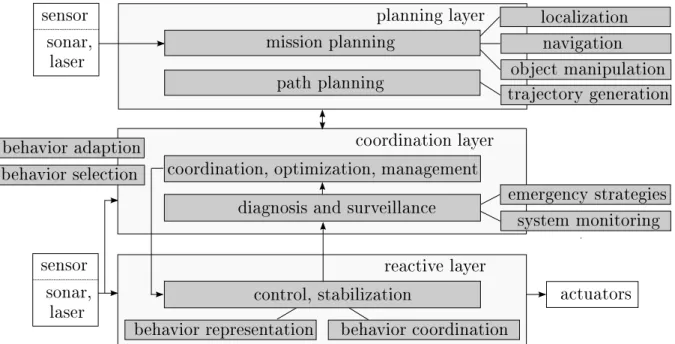

2 State of the art of computer vision and visual servoing

2.1 Perspective camera, multiple-view geometry and omnivision

2.2 Robust point feature detection for recognition

2.3 Visual navigation

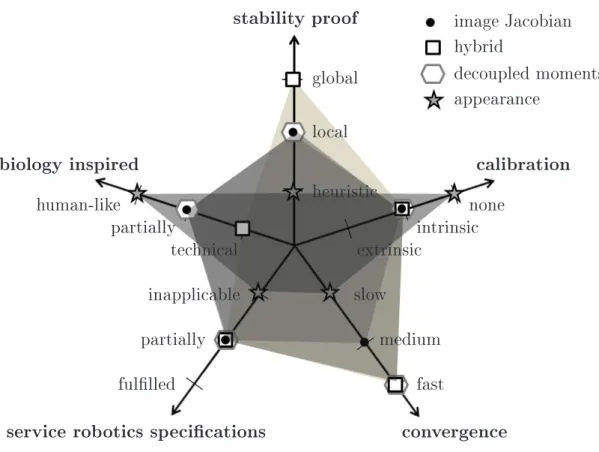

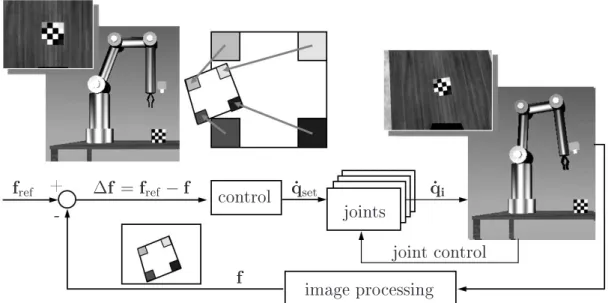

2.4 Image-based visual servoing

2.5 Experimental systems for visual servoing, navigation and localization

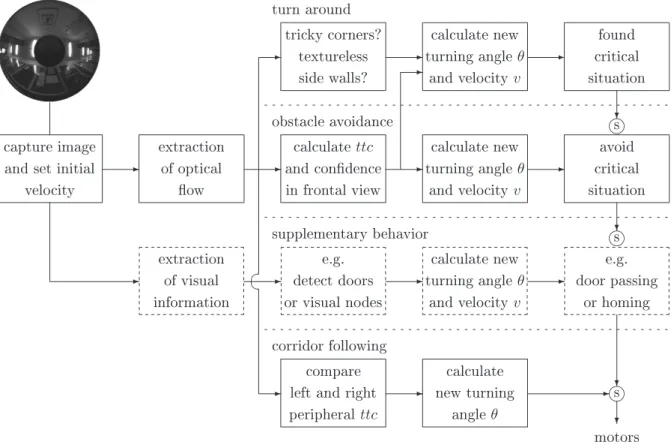

3 From vision guided to visual navigation of mobile robots

3.1 Vision-guided navigation

3.1.1 Planning

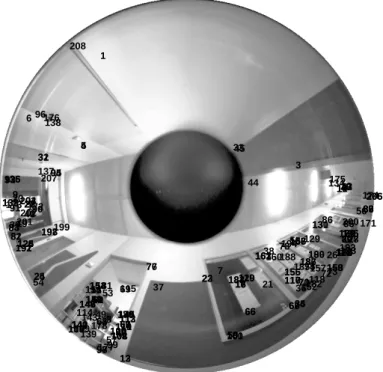

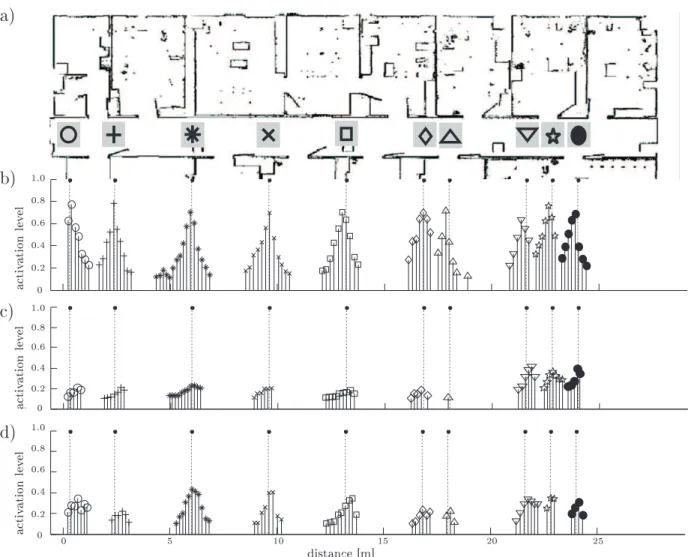

3.1.2 Topological localization

3.2 Visual behavior for door passing

3.3 Visual behaviors for collision-free navigation

3.3.1 Corridor centering

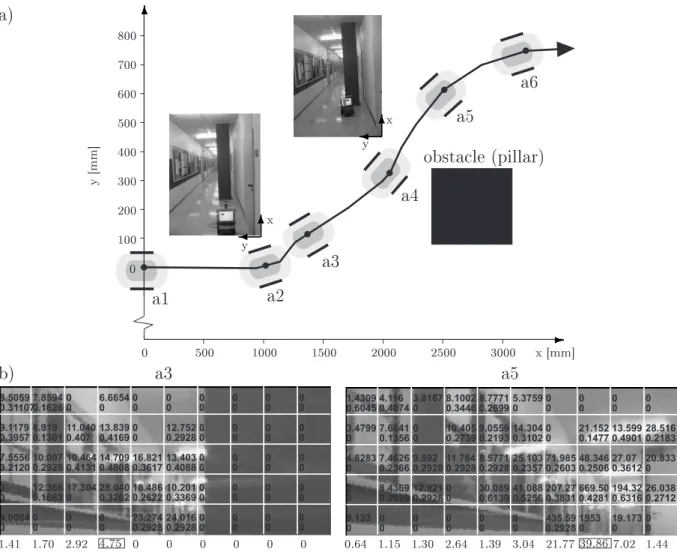

3.3.2 Obstacle avoidance by optical flow

4 Global visual homing by visual servoing

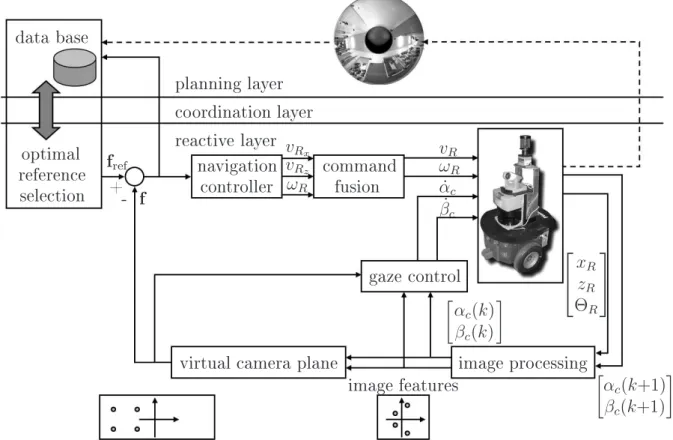

4.1 General concept

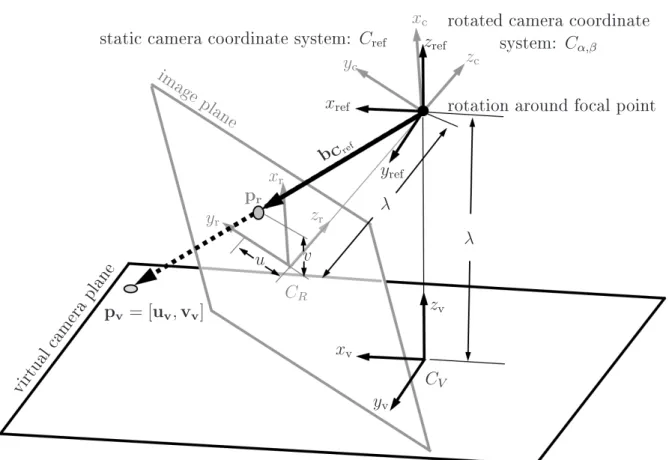

4.2 Virtual camera plane

4.3 Camera gaze control

4.4 Visual navigation control

4.4.1 Control by image Jacobian

4.4.2 Control with image moments and primitive visual behaviors

4.4.3 Control with homography

4.4.4 Experimental results

4.5 Comparison of vision guided and visual navigation

5 Local visual servoing with generic image moments

5.1 Augmented point features

5.2 Generic moments

5.2.1 Moments for rotation

5.2.2 Moments for translation

5.2.3 Coupling analysis of the sensitivity matrix

5.3 Positioning in 4 DOF with augmented point features

5.3.1 Controller optimization

5.3.2 Simulation and experimental results

5.4 Positioning in simulations in 6 DOF with augmented point features

5.5 Alternative: Visual servoing on a virtual camera plane

5.6 Analysis and conclusion

6.1 Stability analysis depending on feature distribution

6.2 Optimal reference image selection

6.2.1 Control criteria

6.3 Navigation in the image space

6.4 Experimental results

6.4.1 Navigation across a sphere within the virtual reality

6.4.2 Navigation across a semi cylinder with a 5 DOF manipulator

6.4.3 Navigation across a cuboid with a 6 DOF manipulator

6.5 Alternative: Model-free pose estimation with local visual servoing

6.6 Evaluation and conclusion

7 Conclusions and future work

A Analysis of the grid-based time to contact from optical flow

B Analysis of the sensitivity matrix

Bibliography

Acknowledgements

The abbreviations used within the scope of this work are listed alphabetically below.

ARIA Advanced Robot Interface for Applications

ARNL Advanced Robotics Navigation and Localization system

a.u. arbitrary units

AUTOSAR AUTomotive Open System ARchitecture

BRIEF Binary Robust Independent Elementary Features

CAD Computer-Aided Design

CMAES Controlled Model-Assisted Evolution Strategy

CV Current View

DBRVS Distance-Based Reference View Selection

DOF Degree Of Freedom

DoG Difference of Gaussian

EKF Extended Kalman Filter

FAST Features from Accelerated Segment Test

FCRVS Fixed Convergence Reference View Selection

FSI Fixed Scale Interpolation

GFTT Good Features To Track

GF-HOG Gradient Field-Histogram of Oriented Gradients

GLOH Gradient Location and Orientation Histogram

GV Goal View

HIL Hardware In the Loop

HOG Histogram of Oriented Gradients

IBVS Image-Based Visual Servoing

IR InfraRed

LQR Linear Quadratic Regulator

MAES Model-Assisted Evolution Strategy

NN Neural Network

ORB Oriented FAST and Rotated BRIEF

ORVS Optimal Reference View Selection

PBVS Position-Based Visual Servoing

PD Proportional Differential

PTZ Pan Tilt Zoom

RANSAC RANdom SAmple Consensus algorithm

RMSE Root Mean Square Error

ROS Robot Operating System

RV Reference View

SIFT Scale Invariant Feature Transformation

SII Scale Invariant Interpolation

SLAM Simultaneous Localization And Mapping

SNN Single Nearest Neighbor

SURF Speeded Up Robust Features

ToF Time of Flight

ttc time to contact

VSLAM Visual Simultaneous Localization And Mapping

WANN Weighted Average among three Nearest Neighbors

In the present work vectors and matrices are printed in bold type. Vectors are displayed by lower-case letters whereas matrices are represented by capital letters, and scalars are expressed in italic style. The nomenclature is sorted as follows: the first classification criterion is Latin before Greek letters, afterwards lower-case before upper-case letters, and finally bold before italic type.

$a$ control action (for appearance based visual servoing)

$a_h$ scaling factor (for homography)

$a_i$, $b_i$ distance of an interest point to its appropriate epipolar line corresponding to the $u$- and $v$-direction, respectively

$a_k$ pixel displacement

$a_m$, $b_m$, $c_m$, $d_m$ model parameters for exponential function

$A$ Hesse matrix

$\alpha$ rotation around the $x$-axis (roll)

$\alpha_a$ correction factor for the adaptive image Jacobian

$\alpha_c$, $\dot{\alpha}_c$ camera pan angle, respectively velocity

$\alpha_{ia}$, $\beta_{ia}$, $\gamma_{ia}$ interior angles

$\alpha_u$, $\alpha_v$ intrinsic camera parameter: scaling factor depending on $\lambda$ and pixel dimensions

$b_{C_{\mathrm{ref}}}$ image features in the reference frame

$\beta$ rotation around the $y$-axis (pitch)

$\beta_c$, $\dot{\beta}_c$ camera tilt angle, respectively velocity

$c$ performance criterion

$\mathit{conf}_{\mathrm{avg}}$ mean of the confidence values

$\mathit{conf}_{\mathrm{seg}(i,j)}$ confidence values in a window with the row and column position $(i, j)$ of the cell

$C$, $C_n$, $C_r$ absolute, normalized and relative number of feature correspondences between the reference view and the current image

$C_{\mathrm{ref}}$, $C_{\alpha,\beta}$, $C_R$ static and rotated camera coordinate systems, respectively, and camera coordinate system in the image plane

$C_V$ virtual camera coordinate system, respectively virtual camera plane

$CV_i$ $i$-th reference view

$d_{kp}$ normalized keypoint descriptor of SIFT features

$d$ distance

$D$ Difference-of-Gaussian

$\Delta f$ error between desired and actual feature locations

$\Delta \hat{f}$ total normalized summed feature error

$\Delta f_\gamma$ correction along $\gamma$ of the averaged keypoint rotation

$\Delta f_\omega$, $\Delta f_\omega$ predicted motion of the image features caused by $\Delta\Theta_R$

$\Delta\varphi$ feature error between reference and current distortion (camera retreat problem)

$\Delta\Theta_R$ orientational task space error

$\Delta x$ lateral task space error

$\Delta z$ longitudinal task space error

$[e_{1a}, e_{2a}]^T$ epipoles from the actual image

$[e_{1\mathrm{ref}}, e_{2\mathrm{ref}}]^T$ epipoles from the desired view

$E$ essential matrix describing the epipolar constraint

$\bar{E}(\theta)$, $\bar{E}(\phi)$, $\bar{E}(r)$ mean absolute error in azimuth, elevation and radius

$E_u$, $E_v$ entropy along the $u$- and $v$-axis, respectively

$\varepsilon$ residual error between model and data point (for error function of the M-estimator)

$\varepsilon_d$ dissimilarity (residual error)

$\varepsilon_\gamma$ estimation error for camera rotation

$\eta_1$, $\eta_2$ tuning variables

$f$ current image features, stated depending on the context as $f_i = [u_i, v_i]$ for the $i$-th image feature with coordinates $u_i$, $v_i$, in the context of SIFT features as $f_i = [u_i, v_i, \phi_i, \sigma_i]$ with the additional attributes orientation $\phi_i$ and scale $\sigma_i$, also in the context of image moments as $f = [f_\alpha, f_\beta, f_\gamma, f_x, f_y, f_z]$

$f_{\mathrm{ref}}$ reference image features, also used in the context of image moments

$f_\alpha$ image moment for rotation around the $x$-axis

$f_\beta$ image moment for rotation around the $y$-axis

$f_\gamma$ image moment for rotation around the optical axis

$f_x$ image moment for translation along the $x$-axis

$f_y$ image moment for translation along the $y$-axis

$f_z$ image moment for translation along the camera axis

$f_{zd}$ image moment for translation along the camera axis, alternative expression via the distance between point features

$F$ cost function

$G$ Gaussian filter

$\gamma$ rotation around the $z$-axis (yaw), respectively the optical camera axis

$\gamma_t$ angle between orientation of virtual camera plane and template plane

$\gamma_V$ angle between the virtual camera plane and the orientation of the robot

$h$ twice the distance between the parabola's vertex and the focus of an omnidirectional camera

$H$, $\hat{H}$ homography, estimated homography by feature correspondences

$H_u(i)$ relative frequency of features in the $i$-th column

$H_v(i)$ relative frequency of features in the $i$-th row

$I$ current image, also denoted as $I(u, v, t)$ in dependence of the pixel coordinates $u$, $v$ and time $t$

$I_{\mathrm{ref}}$ reference image

$[I_u, I_v]^T$ spatial intensity gradient in $u$- and $v$-direction, respectively

$J$ visual image Jacobian

$J^+$ pseudoinverse of the image Jacobian

$J_a$ Jacobian for appearance based visual servoing

$J_e$ Jacobian for visual servoing on epipoles

$J_{v\omega}$ separated Jacobian for rotational motion

$J_{vt}$ separated Jacobian for translational motion

$J_{v\xi u\xi}$ separated Jacobian for angle and axis of rotation parametrization

$J_{xz}$ separated Jacobian for translational motion, reduced to two degrees of freedom

$J_{dk}$ robot Jacobian for differential kinematics

$J_{f_i}$ image Jacobian for the image moment in $i$, where $i$ stands for $x$, $y$, $z$, $\alpha$, $\beta$, $\gamma$

$J_{f_i,j}$ image Jacobian entry for the image moment in $i$ with a movement in $j$, where both $i$ and $j$ stand for $x$, $y$, $z$, $\alpha$, $\beta$, $\gamma$ and $i = j$ (desired couplings)

$\tilde{J}_{f_i,j}$ image Jacobian entry for the image moment in $i$ with a movement in $j$, where both $i$ and $j$ stand for $x$, $y$, $z$, $\alpha$, $\beta$, $\gamma$ and $i \neq j$ (undesired couplings)

$J_\omega$ separated Jacobian for rotational motion, reduced to one degree of freedom

$k$ constant proportional gain

$k_a$ adaptive gain

$k$ proportional gain factor

$K$ camera calibration matrix as a function of the intrinsic camera parameters

$l_k$ image displacement

$L$ Gaussian-blurred image

$\lambda$ focal length

$\lambda_e$ evaluated individuals of $\lambda$-CMAES

$\lambda_{\mathrm{eig}}$ eigenvalue

$\lambda_i$ Lagrange multiplier

$\lambda_p$ offspring of $\lambda$-CMAES

$\mu$ control parameter for Levenberg-Marquardt optimization

$\mu_{(i,j)}$ mean of the time to contact values in a segment with the row and column position