ArmWrestling: efficient binary rewriting for ARM


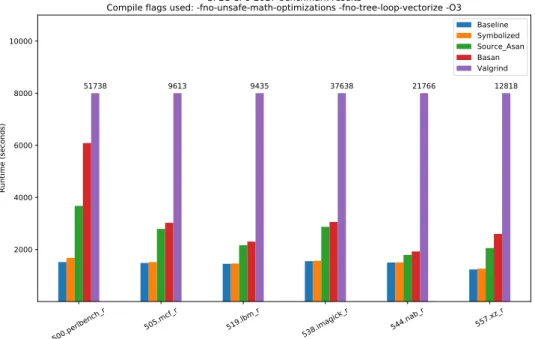

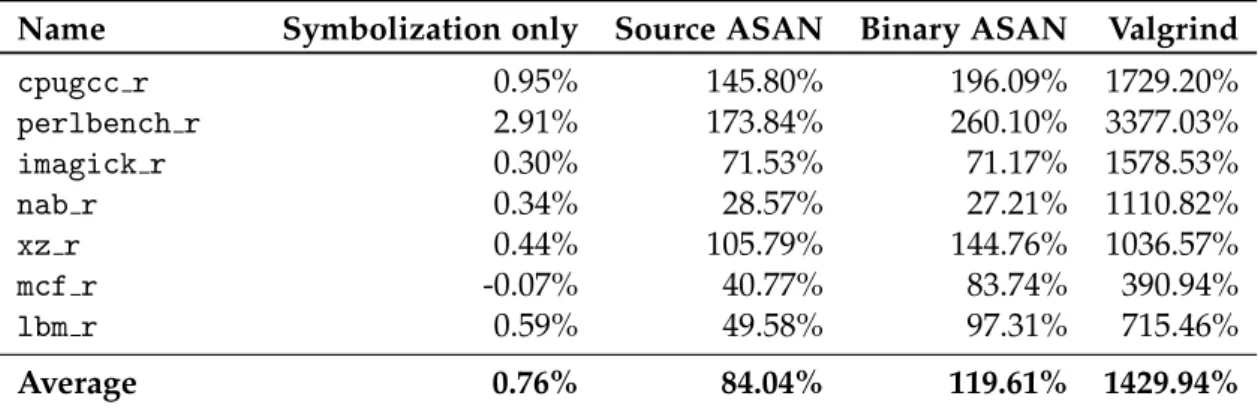

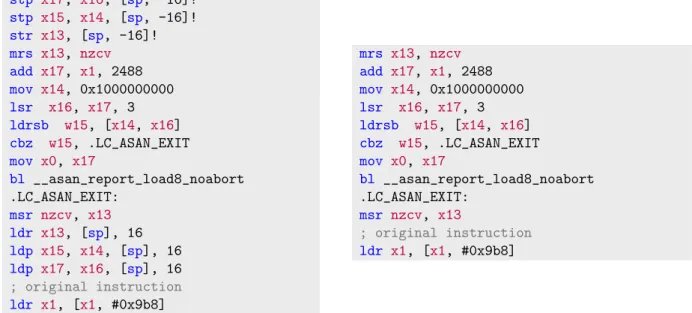

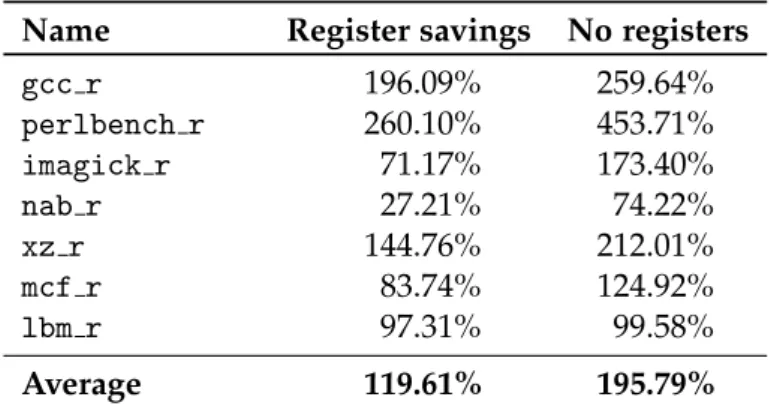

Abbildung
