Generating Multiply Imputed Synthetic Datasets: Theory and Implementation

Volltext

Abbildung

ÄHNLICHE DOKUMENTE

We compared the effects of both conditions on (1) performance measures, including bar-velocity and force output and; (2) psychological measures, including perceived autonomy,

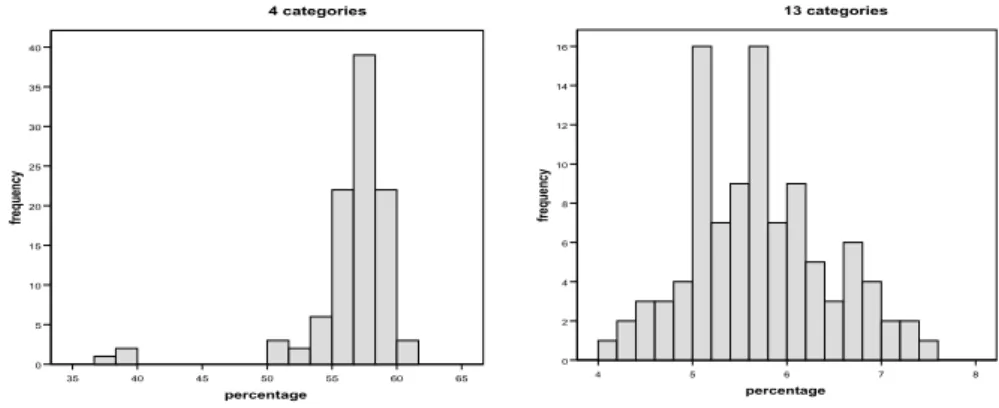

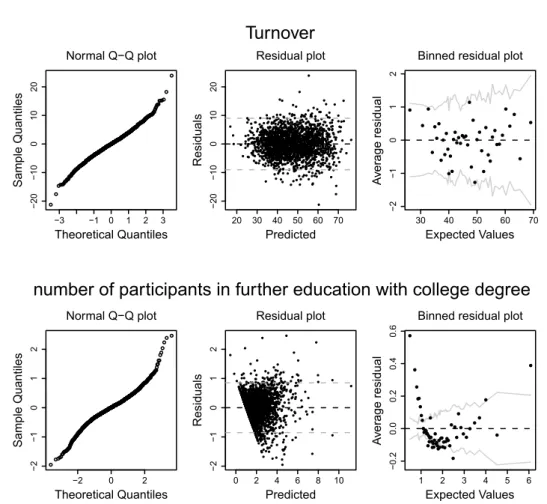

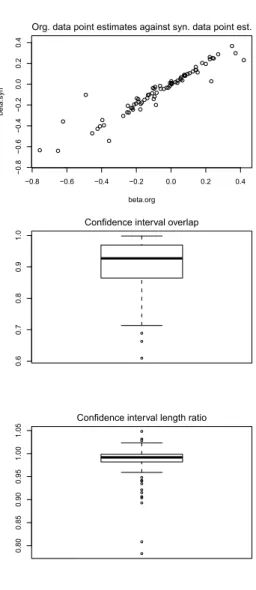

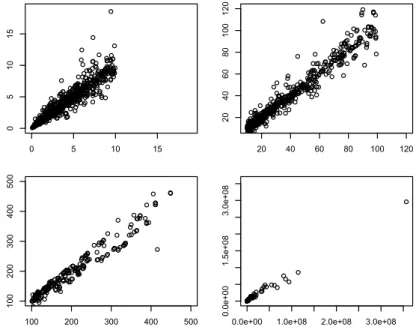

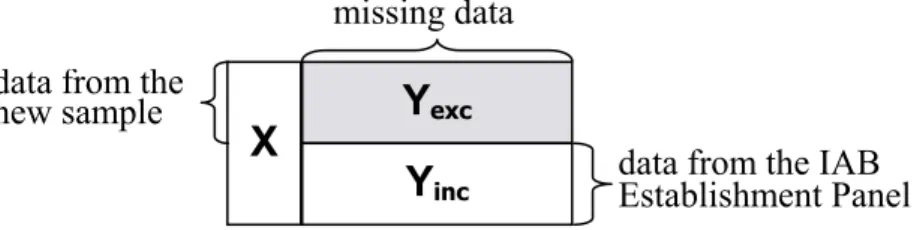

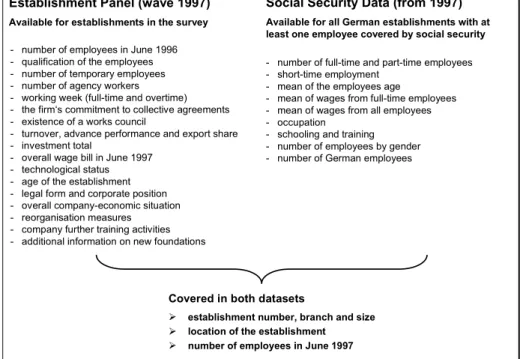

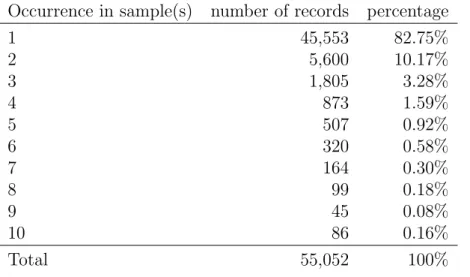

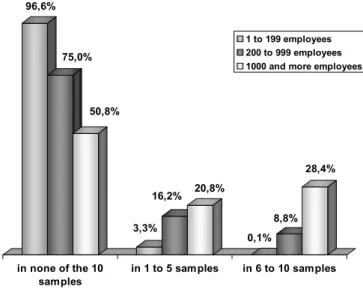

We then introduce our imputation procedures for ordinary and overdispersed count data and present two evaluation studies: The first one generally tests our algorithms’ ability

This article both adds to some of these debates by arguing for the benefits of using informal and unstructured conversations in qualitative research, reiterating the critical

(2012), Items for a description of linguistic competence in the language of schooling necessary for learning/teaching mathematics (end of obliga- tory education) – An approach

local scale: The widespread use of term resilience in the national policy documents is not reflected on local level and is often at odds with the practical understanding

This extension of extrapolation is new: the idea of extending extrapolation to modular inequalities and Banach function spaces first appeared in [57] where the authors and

Imputing a single value for each missing datum and then analyzing the completed data using standard techniques designed for complete data will usually result in standard error

The priming chord creates a tonal reference frame (salience versus pitch of each tone sensation within the chord), and the test chord is perceived relative to that frame.. 5