Knowledge Discovery from CVs: A Topic Modeling Procedure

Volltext

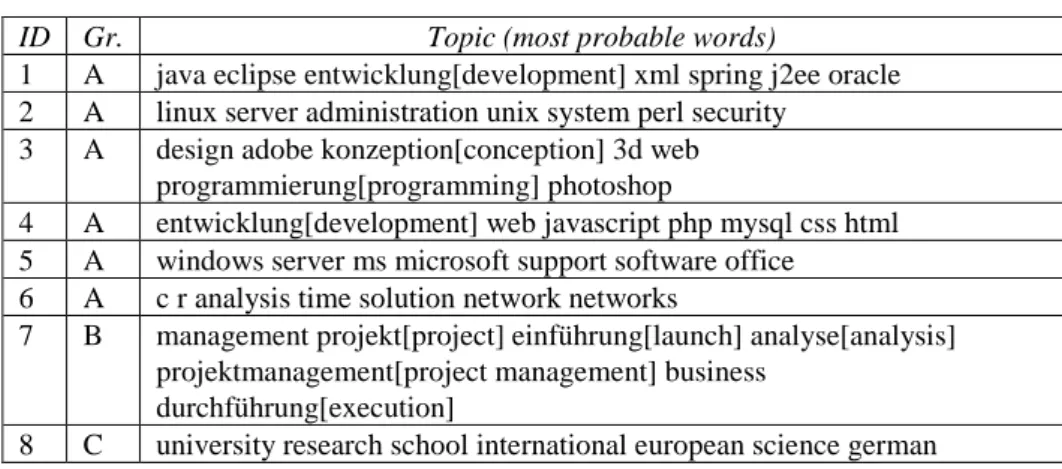

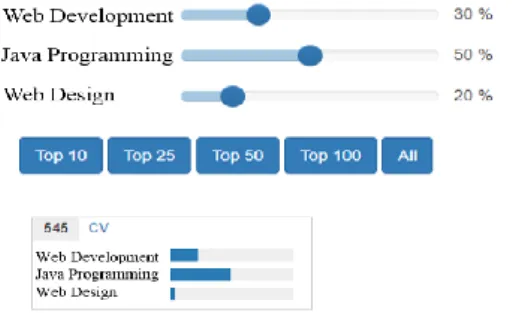

Abbildung

ÄHNLICHE DOKUMENTE

Seetõttu nõustus Jumala Sõna täiuslikuks inimeseks saama, jäädes samal ajal täieliku jumaliku armastuse kandjaks, mis oligi Tema inimeseks kehastumise ainuke

Despite these difficulties, if a reform package is needed to keep the UK in the EU and if this is seen desirable by the remaining Member States, such a process will need to start

Competences for Level 1/Vestibulum: Basic grammar, translation or recherche; questions of comprehension (50 minutes, free quantity of words only for recherche).. Competences

Using a set of real-world OE responses from a market research company, this study explores the potential of three short text topic models for OE responses and compares them to LDA as

The key elements of a growth strategy discussed among Europe’s leaders these days are actually the same as in 1996-97: labour-market reforms, strengthening of the

Keywords: Chief Digital Officer, Smart City Planner, Technical Competences, Transversal Competences, Smart Cities.. 2

Table 5.27.: The average values of the radial crushing strength including the standard deviation and the corresponding pressure of compression of the compacts made of the

In line with the model with the largest influence by Dehaene (1992), it is especially the mathematical competences that are necessary to solve more complex mathematical problems