The proceedings of BIOSIG 2015 include scientific contributions to the annual conference of the Biometrics Special Interest Group (BIOSIG) of the Gesellschaft für Informatik (GI). The conference took place in Darmstadt, 09.-11. September 2015. Advances in biometrics research and new developments in the core biometric application field of security were presented and discussed by international biometrics and security professionals.

ISSN 1617-5468

ISBN 978-3-88579-639-8

Gesellschaft für Informatik e.V. (GI)

publishes this series in order to make available to a broad public recent findings in informatics (i.e. computer science and information systems), to document conferences that are organized in cooperation with GI and to publish the annual GI Award dissertation.

Broken down into

• seminars

• proceedings

• dissertations

• thematics

current topics are dealt with from the vantage point of research and development, teaching and further training in theory and practice.

The Editorial Committee uses an intensive review process in order to ensure high quality contributions.

The volumes are published in German or English.

Information: http://www.gi.de/service/publikationen/lni/

GI-Edition

Lecture Notes in Informatics

Arslan Brömme, Christoph Busch, Christian Rathgeb, Andreas Uhl (Eds.)

BIOSIG 2015

Proceedings of the 14th International Conference of the Biometrics Special Interest Group

09.–11. September 2015 Darmstadt, Germany

Proceedings

Gesellschaft für Informatik e.V. (GI)

Lecture Notes in Informatics (LNI) - Proceedings Series of the Gesellschaft für Informatik (GI) Volume P-245

ISBN 978-3-88579-639-8 ISSN 1617-5468

Volume Editors

Arslan Brömme
GI BIOSIG, Gesellschaft für Informatik e.V.
Ahrstraße 45, D-53175 Bonn
Email: arslan.broemme@aviomatik.de

Christoph Busch
Hochschule Darmstadt, CASED
Haardtring 100, D-64295 Darmstadt
Email: christoph.busch@h-da.de

Christian Rathgeb
Hochschule Darmstadt, CASED
Haardtring 100, D-64295 Darmstadt
Email: christian.rathgeb@h-da.de

Andreas Uhl
University of Salzburg
Jakob-Haringer Str. 2, A-5020 Salzburg
Email: uhl@cosy.sbg.ac.at

Series Editorial Board

Heinrich C. Mayr, Alpen-Adria-Universität Klagenfurt, Austria (Chairman, mayr@ifit.uni-klu.ac.at)

Dieter Fellner, Technische Universität Darmstadt, Germany

Ulrich Flegel, Hochschule für Technik, Stuttgart, Germany

Ulrich Frank, Universität Duisburg-Essen, Germany

Johann-Christoph Freytag, Humboldt-Universität zu Berlin, Germany

Michael Goedicke, Universität Duisburg-Essen, Germany

Ralf Hofestädt, Universität Bielefeld, Germany

Michael Koch, Universität der Bundeswehr München, Germany

Axel Lehmann, Universität der Bundeswehr München, Germany

Peter Sanders, Karlsruher Institut für Technologie (KIT), Germany

Sigrid Schubert, Universität Siegen, Germany

Ingo Timm, Universität Trier, Germany

Karin Vosseberg, Hochschule Bremerhaven, Germany

Maria Wimmer, Universität Koblenz-Landau, Germany

Dissertations

Steffen Hölldobler, Technische Universität Dresden, Germany

Seminars

Reinhard Wilhelm, Universität des Saarlandes, Germany

Thematics

Andreas Oberweis, Karlsruher Institut für Technologie (KIT), Germany

Gesellschaft für Informatik, Bonn 2015

printed by Köllen Druck+Verlag GmbH, Bonn

Chairs’ Message

Welcome to the annual international conference of the Biometrics Special Interest Group (BIOSIG) of the Gesellschaft für Informatik (GI) e.V.

GI BIOSIG was founded in 2002 as an experts’ group for the topics of biometric person identification/authentication and electronic signatures and their applications. Over more than a decade, the annual conference, in strong partnership with the Competence Center for Applied Security Technology (CAST), has established a well-known forum for biometrics and security professionals from industry and science, as well as representatives of national governmental bodies and European institutions who are working in these areas.

The BIOSIG 2015 international conference is jointly organized by the Biometrics Special Interest Group (BIOSIG) of the Gesellschaft für Informatik e.V., the Competence Center for Applied Security Technology e.V. (CAST), the German Federal Office for Information Security (BSI), the European Association for Biometrics (EAB), the ICT COST Action IC1106, the European Commission Joint Research Centre (JRC), the TeleTrusT Deutschland e.V. (TeleTrusT), the Norwegian Biometrics Laboratory (NBL), the Center for Advanced Security Research Darmstadt (CASED), and the Fraunhofer Institute for Computer Graphics Research (IGD). This year’s international conference BIOSIG 2015 is again technically co-sponsored by the Institute of Electrical and Electronics Engineers (IEEE) and is enriched with satellite workshops by the TeleTrusT Biometric Working Group and the European Association for Biometrics.

The international program committee accepted full scientific papers in strict accordance with the LNI guidelines (acceptance rate ~22%) within a scientific double-blind review process of at least five reviews per paper. For the printed proceedings, all papers were formally restricted to 12 pages for regular research contributions, which include an oral presentation, and to 8 pages for further conference contributions, which include a poster presentation at the conference site.

Furthermore, the program committee has created a program including selected contributions of strong interest (further conference contributions) for the outlined scope of this conference. All paper contributions for BIOSIG 2015 will be published additionally in the IEEE Xplore Digital Library.

We would like to thank all authors for their contributions and the numerous reviewers for their work in the program committee.

Darmstadt, 09th September 2015

Arslan Brömme, GI BIOSIG, GI e.V.

Christoph Busch, Hochschule Darmstadt

Christian Rathgeb, Hochschule Darmstadt

Andreas Uhl, University of Salzburg

Chairs

Arslan Brömme, GI BIOSIG, GI e.V., Bonn, Germany

Christoph Busch, Hochschule Darmstadt - CASED, Germany

Christian Rathgeb, Hochschule Darmstadt - CASED, Germany

Andreas Uhl, University of Salzburg, Austria

Program Committee

Harald Baier (CASED, DE)

Oliver Bausinger (BSI, DE)

Thiriamchos Bourlai (WVU, US)

Patrick Bours (GUC, NO)

Sebastien Brangoulo (Morpho, FR)

Ralph Breithaupt (BSI, DE)

Julien Bringer (Morpho, FR)

Arslan Brömme (GI/BIOSIG, DE)

Christoph Busch (CAST-Forum, DE)

Victor-Philipp Busch (Sybuca, DE)

Patrizio Campisi (Uni Roma 3, IT)

Nathan Clarke (CSCAN, UK)

Paul Lobato Correira (LXIT, PT)

Adam Czajka (NASK, PL)

Farzin Deravi (UKE, UK)

Martin Drahansky (BUT, CZ)

Andrzej Drygajlo (EPFL, CH)

Julian Fierrez (UAM, ES)

Simone Fischer-Hübner (KAU, SE)

Lothar Fritsch (NR, NO)

Steven Furnell (CSCAN, UK)

Sonia Garcia (TSP, FR)

Patrick Grother (NIST, US)

Olaf Henniger (Fhg IGD, DE)

Detlef Hühnlein (ecsec, DE)

Robert W. Ives (USNA, US)

Christiane Kaplan (softpro, DE)

Stefan Katzenbeisser (CASED, DE)

Tom Kevenaar (GenKey, NL)

Ulrike Korte (BSI, DE)

Bernd Kowalski (BSI, DE)

Ajay Kumar (Poly, HK)

Herbert Leitold (a-sit, AT)

Guoqiang Li (GUC, NO)

Stan Li (CBSR, CN)

Paulo Lobato Correira (IST, PT)

Davide Maltoni (UBO, IT)

Johannes Merkle (secunet, DE)

Didier Meuwly (NFI, NL)

Emilio Mordini (CSSC, IT)

Elaine Newton (NIST, US)

Mark Nixon (UoS, UK)

Alexander Nouak (Fhg IGD, DE)

Markus Nuppeney (BSI, DE)

Hisao Ogata (Hitachi, JP)

Martin Olsen (GUC, NO)

Javier Ortega-Garcia (UAM, ES)

Michael Peirce (Daon, IR)

Dijana Petrovska (TSP, FR)

Anika Pflug (CASED, DE)

Ioannis Pitas (AUT, GR)

Fernando Podio (NIST, US)

Raghu Ramachandra (GUC, NO)

Kai Rannenberg (Uni FFM, DE)

Christian Rathgeb (CASED, DE)

Arun Ross (MSU, US)

Heiko Roßnagel (Fhg IAO, DE)

Raul Sanchez-Reillo (UC3M, ES)

Stephanie Schuckers (ClU, US)

Günter Schumacher (JRC, IT)

Takashi Shinzaki (Fujitsu, JP)

Max Snijder (EAB, NL)

Luis Soares (ISCTE-IUL, PT)

Luuk Spreeuwers (UTW, NL)

Elham Tabassi (NIST, US)

Tieniu Tan (NLPR, CN)

Massimo Tistarelli (UNISS, IT)

Dimitrios Tzovaras (CfRaT, GR)

Andreas Uhl (COSY, AT)

Markus Ullmann (BSI, DE)

Raymond Veldhuis (UTW, NL)

Anne Wang (Cogent, US)

Jim Wayman (SJSU, US)

Peter Wild (UoR, UK)

Andreas Wolf (BDR, DE)

Bian Yang (GUC, NO)

Hosts

Biometrics Special Interest Group (BIOSIG) of the Gesellschaft für Informatik (GI) e.V.

http://www.biosig.org

Competence Center for Applied Security Technology e.V. (CAST)

http://www.cast-forum.de

Bundesamt für Sicherheit in der Informationstechnik (BSI)

http://www.bsi.bund.de

European Association for Biometrics (EAB)

http://www.eab.org

European Commission Joint Research Centre (JRC)

http://ec.europa.eu/dgs/jrc/index.cfm

TeleTrusT Deutschland e.V. (TeleTrusT)

http://www.teletrust.de

Norwegian Biometrics Laboratory (NBL)

http://www.nislab.no/biometrics_lab

Center for Advanced Security Research Darmstadt (CASED)

http://www.cased.de

Fraunhofer-Institut für Graphische Datenverarbeitung (IGD)

http://www.igd.fraunhofer.de

BIOSIG 2015 – Biometrics Special Interest Group

“2015 International Conference of the Biometrics Special Interest Group”

09th-11th September 2015

Biometrics provides efficient and reliable solutions to recognize individuals. With the increasing number of identity theft and misuse incidents, we observe significant fraud in e-commerce and thus growing interest in the trustworthiness of person authentication.

Nowadays we find biometric applications in areas like border control, national ID cards, e-banking, e-commerce, e-health etc. Large-scale applications such as the European Union Visa Information System (VIS) and Unique Identification (UID) in India require high accuracy as well as reliability, interoperability, scalability and usability. Many of these requirements are shared by forensic applications.

Multimodal biometrics combined with fusion techniques can improve recognition performance. Efficient searching or indexing methods can improve identification efficiency. Additionally, the quality of captured biometric samples can strongly influence the performance.

Moreover, mobile biometrics is an emerging area, and biometrics-based smartphones can support deployment and acceptance of biometric systems. However, concerns about security and privacy cannot be neglected. The relevant techniques in the areas of presentation attack detection (liveness detection) and template protection are about to supplement biometric systems in order to improve fake resistance and to prevent potential attacks such as cross-matching and identity theft.

BIOSIG 2015 offers you once again a platform for international experts’ discussions on biometrics research and the full range of security applications.

Table of Contents

BIOSIG 2015 – Regular Research Papers ……….…... 13 Karl Ricanek Jr., Shivani Bhardwaj, Michael Sodomsky

A Review of Face Recognition against Longitudinal Child Faces………...

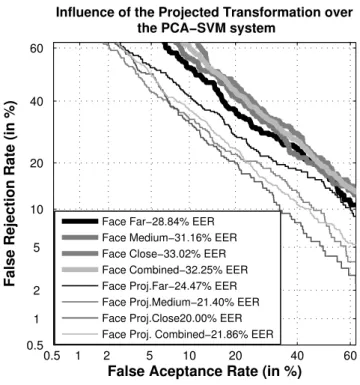

Ester Gonzalez-Sosa, Ruben Vera-Rodriguez, Julian Fierrez, Pedro Tome, Javier Ortega-Garcia

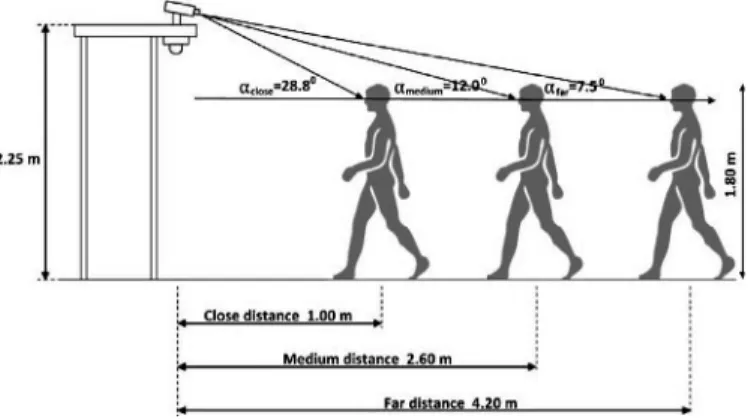

Pose Variability Compensation Using Projective Transformation Forensic Face Recognition………..………..…………...……….…...

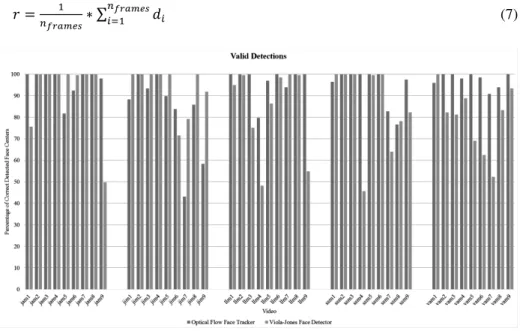

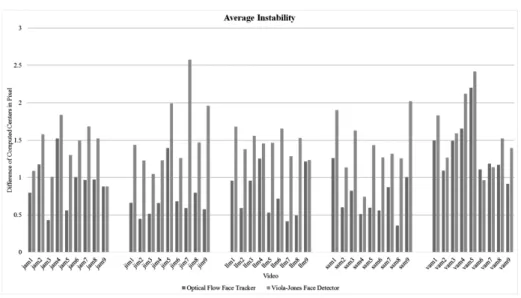

Andreas Ranftl, Fernando Alonso-Fernandez, Stefan Karlsson

Face Tracking using Optical Flow Development of a Real-Time AdaBoost Cascade Face Tracker……….………...

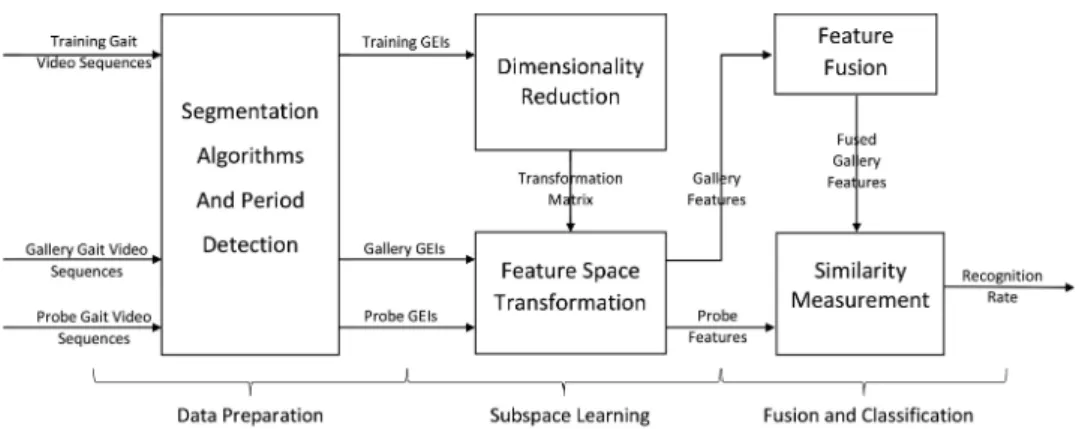

Ning Jia, Victor Sanchez, Chang-Tsun Li, Hassan Mansour

On Reducing the Effect of Silhouette Quality on Individual Gait Recognition:

a Feature Fusion Approach………...………...

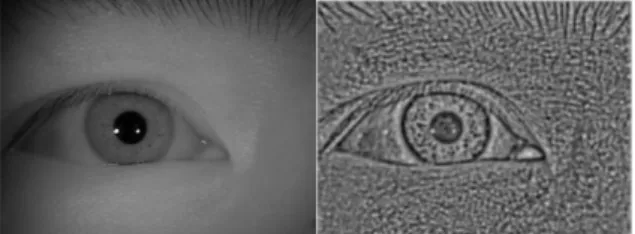

Peter Wild, Heinz Hofbauer, James Ferryman, Andreas Uhl

Segmentation-level Fusion for Iris Recogntion……….…...

Michael Happold

Structured Forest Edge Detectors for Improved Eyelid and Iris Segmentation..…...

Martin Aastrup Olsen, Martin Böckeler, Christoph Busch

Predicting Dactyloscopic Examiner Fingerprint Image Quality Assessments...…...

Johannes Kotzerke, Stephen A. Davis, Robert Hayes, Luuk J. Spreeuwers, Raymond N.J. Veldhuis, Kathy J. Horadam

Identification performance of evidential value estimation for fingermarks...…...

Jesse Hartloff, Avradip Mandal, Arnab Roy

Privacy Preserving Technique for Set-Based Biometric Authentication using Reed-Solomon Decoding……….………...…...

Benjamin Tams, Johannes Merkle, Christian Rathgeb, Johannes Wagner, Ulrike Korte, Christoph Busch

Improved Fuzzy Vault Scheme for Alignment-Free Fingerprint Features……...…...

Edlira Martiri, Bian Yang, Christoph Busch

Protected Honey Face Templates……….……...…...

Alexandre Sierro, Pierre Ferrez, Pierre Roduit

Contact-less Palm/Finger Vein Biometric………...…...

15

27

39

49

61

73

85

97

109

121

133

145

Guoqiang Li, Bian Yang, Christoph Busch

A Fingerprint Indexing Scheme with Robustness against Sample Translation and Rotation………..…...…...

Kribashnee Dorasamy, Leandra Webb, Jules Tapamo

Evaluating the Change in Fingerprint Directional Patterns under Variation of Rotation and Number of Regions.……….………....

BIOSIG 2015 – Further Conference Contributions………

Christof Kauba, Andreas Uhl

Robustness Evaluation of Hand Vein Recognition Systems………...…...

Nahuel González, Enrique P. Calot

Finite Context Modeling of Keystroke Dynamics in Free Text...…...

Heinz Hofbauer, Christian Rathgeb, Johannes Wagner, Andreas Uhl, Christoph Busch

Investigation of Better Portable Graphics Compression for Iris Biometric

Recognition………...…...

Nalla Pattabhi Ramaiah, Nalla Srilatha, Chalavadi Krishna Mohan

Sparsity-based Iris Classification using Iris Fiber Structures ………...…...

Pedro Tome and Sébastien Marcel

Palm Vein Database and Experimental Framework for Reproducible Research...

Michael Fairhurst, Meryem Erbilek, Marjory Da Costa-Abreu

Exploring Gender Prediction from Iris Biometrics………...

Dominik Klein, Jan Kruse

A Comparative Study on Image Hashing for Document Authentication…………...

Alaa Darabseh, Akbar Siami Namin

On Accuracy of Keystroke Authentications Based on Commonly Used English Words…………...

Christof Jonietz, Eduardo Monari, Chengchao Qu

Towards Touchless Palm and Finger Detection for Fingerprint Extraction with Mobile Devices………...

Naser Damer, Alexander Nouak

Weighted Integration of Neighbors Distance Ratio in Multi-biometric Fusion...

157

169

181

183

191

199

207

215

223

231

239

247

255

Soumik Mondal, Patrick Bours

Does Context matter for the Performance of Continuous Authentication Biometric Systems? An Empirical Study on Mobile Devices...

Thomas Klir

Fingerprint Image Enhancement with easy to use algorithms…….…………...

Lisa de Wilde, Luuk Spreeuwers, Raymond Veldhuis

Exploring How User Routine Affects the Recognition Performance of a Lock Pattern……….………...

Thomas Herzog, Andreas Uhl

JPEG Optimisation for Fingerprint Recognition: Generalisation Potential of an Evolutionary Approach ………..……….………...

Nassima Kihal, Arnaud Polette, Salim Chitroub, Isabelle Brunette, Jean Meunier

Corneal Topography: An Emerging Biometric System for Person Authentication....

Rig Das, Emanuele Maiorana, Daria La Rocca, Patrizio Campisi

EEG Biometrics for User Recognition using Visually Evoked Potentials…………....

Maximilian Krieg, Nils Rogmann

Liveness Detectionin Biometrics……….…………...

M. Hamed Izadi, Andrzej Drygajlo

Discarding low quality Minutia Cylinder-Code pairs for improved fingerprint comparison……….……….…………...

Christian Kahindo, Sonia Garcia-Salicetti, Nesma Houmani

A Signature Complexity Measure to select Reference Signatures for Online Signature Verification………..……….…………...

263

271

279

287

295

303

311

319

327

BIOSIG 2015

Regular Research Papers

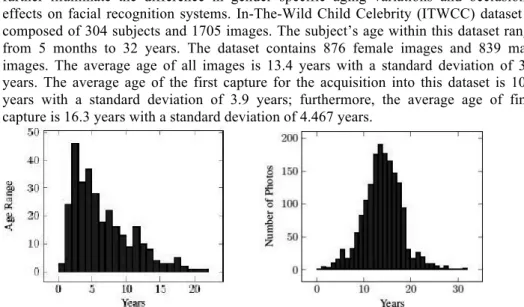

A Review of Face Recognition against Longitudinal Child Faces

Karl Ricanek Jr., Ph.D., Senior Member IEEE, Shivani Bhardwaj, and Michael Sodomsky

I3S Institute – Face Aging Group
University of North Carolina Wilmington
601 South College Road
28403 Wilmington
ricanekk@uncw.edu
sb2534@uncw.edu

Abstract: It is well established that the performance of face-based biometric systems is affected by the variation caused by aging; however, the question has not been adequately investigated for non-adults, i.e. children from birth to adulthood. The majority of research and development in automated face recognition has focused on adults. The objective of this paper is to establish an understanding of face recognition against non-adults. This work develops a publicly available longitudinal child face database of child celebrities from images in the wild (ITWCC). It explores the challenges of biological changes due to maturation, i.e. changes in craniofacial morphology (the face grows longer and wider, the nose expands, the lips widen, etc.), and examines their impact on face recognition. The systems chosen are: Cognitec’s FaceVacs 8.3, Open Source Biometric Recognition (SF4), principal component analysis (PCA), linear discriminant analysis (LDA), local region principal component analysis (LRPCA), and cohort linear discriminant analysis. The face matchers recorded low performance: the top verification performance is 37% TAR at 1% FAR, and the best rank-1 identification reached a 25% recognition rate on a gallery of 301 subjects.
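For readers unfamiliar with the two figures of merit quoted in the abstract, the following minimal sketch illustrates how a true accept rate (TAR) at a fixed false accept rate (FAR) and a rank-1 identification rate are commonly computed from comparison scores. It is not the authors' evaluation code: the function names `tar_at_far` and `rank1_rate`, the convention that higher scores mean greater similarity, and the strict-inequality acceptance rule are all assumptions made for illustration.

```python
import math


def tar_at_far(genuine, impostor, far=0.01):
    """Fraction of genuine scores accepted at a threshold that lets
    through at most `far` of the impostor scores (higher = more similar)."""
    ranked = sorted(impostor, reverse=True)
    # Number of impostor comparisons that may be (wrongly) accepted.
    k = math.floor(far * len(ranked))
    # Accept only scores strictly above the (k+1)-th highest impostor score.
    threshold = ranked[k] if k < len(ranked) else float("-inf")
    accepted = sum(1 for s in genuine if s > threshold)
    return accepted / len(genuine)


def rank1_rate(similarity, probe_ids, gallery_ids):
    """Fraction of probes whose best-scoring gallery entry has the same identity.

    similarity[i][j] is the score between probe i and gallery entry j.
    """
    hits = 0
    for row, probe_id in zip(similarity, probe_ids):
        best = max(range(len(row)), key=row.__getitem__)
        hits += gallery_ids[best] == probe_id
    return hits / len(probe_ids)
```

Under these assumptions, a result such as "37% TAR at 1% FAR" would correspond to `tar_at_far(genuine, impostor, far=0.01)` returning 0.37, and a 25% rank-1 rate to `rank1_rate(...)` returning 0.25.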

1. Introduction

The human face is an important feature for identity recognition. The characteristics of the face that make it a desirable biometric modality are its uniqueness, universality, acceptability, semi-permanence, and easy collectability [RB11]. Because of its potential and its wide variety of possible applications, automated face recognition has received a lot of attention over the last two decades. Face recognition can be accomplished from a distance and via non-contact acquisition, which offers an added advantage over most biometric systems and makes it more suitable for security and surveillance systems. Face recognition may play a vital role in identifying children who go missing and in an extensive range of access control and monitoring systems, especially to safeguard children. This technology can provide a whole new approach to protecting and supporting latchkey kids and to providing access control for various Internet of Things devices across different age groups. It can be used to protect the non-adult population from predators and illegitimate web content.

Face recognition is a challenging problem, and a great deal of work has been completed for pose correction, illumination variation, and expression to support face recognition in the wild. However, the majority of the work done has been focused on adults and deals with the dynamics of mature faces. The objective of this paper is to review the current state of facial recognition algorithms with a focus on non-adult stages of growth and development, 2 years to 16 years.

With respect to facial recognition systems, aging includes variation in the shape, size and texture of the face. These temporal changes cause performance degradation; hence, state-issued IDs, e.g. driving licenses, have to be renewed every 5-10 years. Matthew Turk stated that “developing a computational model of face recognition is quite difficult, because faces are complex, multidimensional, and meaningful visual stimuli” [TP91]; however, when aging information is added to this problem, it becomes considerably more difficult. The most challenging problem in developing a solution for childhood face recognition is the formation of a useful dataset. This work addresses this primary concern.

Contributions

This paper makes the following contributions to the research community: 1. it provides the baseline for face recognition performance for children against a suite of traditional face recognition (FR) techniques and investigates the impact of well-defined structural (skeletal) changes of the face on this suite of FR techniques; 2. it establishes the first moderate-scale publicly available child face database focused on the growth and development period¹, the lack of which is one of the key issues in evaluation; and 3. it provides a methodological framework for investigating the problem of face recognition across childhood.

2. Background

Facial recognition is a complex topic that has been researched very heavily, and many attempts have been made to understand the effects aging has on facial recognition systems. However, algorithm performance with respect to human aging during the growth and development phase of childhood has only just begun to be explored by researchers. One of the biggest issues is the vast amount of data that is required to fully understand the human face and its maturation process. As the face changes over time, recognizing the person becomes more challenging. This is further exacerbated if the person under inspection is not known to the observer.

Anthropological and forensic studies have contributed significantly to showing that age-related changes in non-adults differ from face aging in adults. Human aging can be studied as a two-stage process: the first stage involves the growth and development phase and

1