LS-8 Report 4

Siegfried Bell and Steffo Weber

Informatik VIII

University of Dortmund

D-44221 Dortmund

email: bell@ls8.informatik.uni-dortmund.de

Dortmund, July 12, 1993

1 This report is an extended version of the presentation "On the close logical relationship between FOIL and the frameworks of Helft and Plotkin" given at the 3rd International Workshop of Inductive Logic

Inductive Logic Programming (ILP) is closely related to Logic Programming (LP) by name. We extract the basic differences between ILP and LP by comparing both, and give definitions of the basic assumptions of their paradigms, e.g. the closed world assumption, the open domain assumption and the open world assumption used in ILP.

We then define a three-valued semantics of ILP and point out relations between our semantics and the frameworks of Plotkin [Plotkin, 1971] and of Helft [Helft, 1989]. Finally, we show how FOIL [Quinlan, 1990] fits into our work, and we compare our semantics with other three-valued logics.

1 Introduction

2 Logic Programming and Inductive Logic Programming

3 A logical framework

3.1 Assumptions in ILP

3.2 A partial Herbrand interpretation

3.3 A semantic of a program P

3.4 Valid hypotheses

3.5 Summary

4 Relations

4.1 Helft: induction as nonmonotonic inference

4.2 FOIL in a logical framework

4.3 Plotkin's inductive task

4.4 Comparison with other three-valued logics

5 Conclusions and further works

1 Introduction

The relationship of logic, control and algorithms can be expressed symbolically by the equation Algorithm = Logic + Control [Kowalski, 1979]. Kowalski used this equation to define that logic programs express the logical component of algorithms, and logic programming (LP) is the corresponding research area [Apt and van Emden, 1982]. Further, Kowalski pointed out that LP is closely related to the closed world assumption (CWA), in contrast to the open world assumption (OWA) in classical logic [Kowalski, 1989].

Inductive Logic Programming is a new area of research, introduced and described by S. Muggleton [Muggleton, 1990] as the intersection of logic programming and machine learning. We give some readings of this description, because there is no unique view. First, ILP can be understood as program synthesis (P. Brazdil); second, as an inductive method for programmers (K. Morik) to develop and verify programs or to represent knowledge; and third, our understanding: we emphasize the words logic programming and understand ILP as the intersection of the research areas of inductive logic and logic programming. In the following we describe ILP from this point of view.

In Inductive Logic Programming there are two main frameworks, proposed by [Plotkin, 1971] and [Helft, 1989]. These frameworks differ in the underlying assumptions: what they consider false, and what they consider to be still unknown. We characterize the first one with the OWA. Examples of this framework are CLINT [De Raedt and Bruynooghe, 1989], GOLEM [Muggleton and Feng, 1992] and RDT [Kietz and Wrobel, 1991]. FOIL [Quinlan, 1990] can be used in two modes. First, it can be used with the OWA. Second, FOIL can be used with the CWA; in this mode, it is an example of Helft's framework. But neither Helft nor Quinlan gives formal definitions of their CWA or OWA.

In the following we argue that the CWA in ILP cannot be the same as the CWA in logic programming. Therefore, we need a new definition of the CWA in ILP ($\mathrm{CWA}_{ILP}$). We also define what we mean by an open domain assumption (ODA) and by the OWA. We then formalize these settings with a model-based three-valued interpretation of formulas in ILP, taking into consideration the well-known field of logic programming. Finally, we show that this framework incorporates Plotkin's setting of inductive inference and that it is a logical description of FOIL, and we compare our logic with other three-valued logics.

2 Logic Programming and Inductive Logic Programming

In this section we compare the semantics of logic programming (LP) and Inductive Logic Programming (ILP) to work out the differences between these two paradigms.

Table 1 contains the logic program 1, which should represent four elements and stuff. But what do we mean if we write such a logic program? K. Apt [Apt, 1990] argued that in logic programming we mean that water is an element, ... and mud is not an element, water is no stuff, ... This meaning is also listed in Table 1.

Shepherdson [Shepherdson, 1987] claimed that we want to deduce from the fact son(bill, joe, jane) and the parent relation given by the logic program 2 in Table 1 the negative information $\neg$son(bill, harry, maude) and further $\neg$parent(harry, maude, bill).

Logic Program 1:
    element(fire). element(air). element(water). element(earth). stuff(mud).

Meaning:
    $\neg$element(mud). $\neg$stuff(water). $\neg$stuff(earth). $\neg$stuff(air). stuff(mud).
    $\neg$stuff(fire). element(fire). element(air). element(water). element(earth).

Logic Program 2:
    parents(Father, Mother, Child) :- son(Child, Father, Mother).
    parents(Father, Mother, Child) :- daughter(Child, Father, Mother).
    son(bill, joe, jane). daughter(sue, harold, maude).

Meaning A:
    parents(Father, Mother, Child) $\leftarrow$ son(Child, Father, Mother).
    parents(Father, Mother, Child) $\leftarrow$ daughter(Child, Father, Mother).

Meaning B:
    parents(Father, Mother, Child) $\leftrightarrow$ son(Child, Father, Mother) $\vee$ daughter(Child, Father, Mother).
    $\neg$son(bill, harry, maude).

Table 1: Logic Programming

This means we should interpret the logic program 2 as given by Meaning B. Another reading is the interpretation of rules as premise, material implication and conclusion, Meaning A. This reading would not permit us to deduce the negative information, because from a false premise we can conclude everything. So, with logic program 2 we mean that the parent relation is defined by the son and the daughter predicates, or that the parent relation is equivalent to the son or daughter relation.

Kowalski [Kowalski, 1989] related these interpretations of logic programs to the closed world assumption in contrast to the open world assumption. Rules of logic programs should be interpreted as if-then-and-only-if definitions under the CWA. The if-then-and-only-if reading reflects our Meaning B in Table 1. He defined a static and a dynamic management of a data base depending upon the form of definitions: whether data (facts or rules) is defined by means of complete if-then-and-only-if definitions or by means of only the if-halves, whether the only-if half of an if-then-and-only-if definition is stated explicitly or is assumed implicitly, and whether the only-if assumption is understood as a statement of the object language or as a statement of the meta-language.

The meaning of logic programs was formally captured by the closed world assumption [Reiter, 1978]. Because this solution has some computational problems, Clark proposed the Negation As Failure rule [Clark, 1978], whereas Apt and van Emden described semantics based on least fixpoints [Apt and van Emden, 1982]. This corresponds to a reading in which everything that is given is true and everything that is not given is false. Shepherdson summarizes this in the helpful motto: We mean what we say and nothing more.

We say:
    Background knowledge and       p(c), p(a), p(b)               (incomplete set
    positive examples:             p(a) $\rightarrow$ q(a)        of formulas)
                                   p(b) $\rightarrow$ q(b)
    Negative examples:             q(d)

We mean:
    Hypothesis:                    p(X) $\rightarrow$ q(X)
    Prediction:                    q(c)

Table 2: Inductive Logic Programming

Definition 1 (Closed World Assumption, $\mathrm{CWA}_{LP}$) Let $P$ be a logic program. Then define

$$\mathrm{CWA}_{LP}(P) := P \cup \{\neg A : P \not\Vdash A\}$$
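As a minimal sketch of Definition 1, the following Python fragment applies the closed world assumption to logic program 1 of Table 1. It assumes a ground program whose atoms are encoded as (predicate, constant) tuples; this encoding and the helper names `least_model` and `cwa_lp` are illustrative choices, not part of the report. For a definite program, the ground atoms that are not entailed are exactly those outside the least model, which is what the sketch relies on.

```python
# Sketch of CWA_LP for a ground definite program.
# Assumption: atoms are (predicate, constant) tuples; names are hypothetical.

def least_model(facts, rules):
    """Derive all ground atoms that follow from facts and ground rules.

    rules is a list of (head, [body atoms]); iteration stops at a fixpoint.
    """
    model = set(facts)
    changed = True
    while changed:
        changed = False
        for head, body in rules:
            if head not in model and all(b in model for b in body):
                model.add(head)
                changed = True
    return model


def cwa_lp(facts, rules, herbrand_base):
    """Return (derivable atoms, atoms that the CWA declares false)."""
    true_atoms = least_model(facts, rules)
    negated = set(herbrand_base) - true_atoms
    return true_atoms, negated


constants = ["fire", "air", "water", "earth", "mud"]
facts = {("element", c) for c in constants[:4]} | {("stuff", "mud")}
herbrand_base = {(p, c) for p in ("element", "stuff") for c in constants}

true_atoms, negated = cwa_lp(facts, [], herbrand_base)
print(sorted(negated))
```

The negated atoms correspond to the "Meaning" rows of Table 1: mud is not an element, and none of the four elements is stuff.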

A less restrictive interpretation is used in ILP, in which we mean more than what we say, in Shepherdson's terms, or in which we have a dynamic representation, in Kowalski's terms.

Following the framework of [Plotkin, 1971], the inductive learning task in ILP can be described as using a background theory $B$, a set of positive examples $E^+$ and a set of negative examples $E^-$. The desired output is a hypothesis which describes the positive facts w.r.t. the background knowledge and no negative facts. Plotkin has proven that there is no hypothesis which covers exactly the positive examples and no other fact. In general, we can deduce from a hypothesis facts which are not known. Let us call this set of facts the prediction.

Or, in another sense, in learning we complete an incomplete set of data with the prediction. Table 2 shows such an incomplete set of formulas from which we want to infer a complete set of formulas. Again we distinguish between what we say and what we mean. From a semantical view, we want to infer an interpretation in which the prediction is satisfied. We do this by adding the hypothesis $p(X) \rightarrow q(X)$, which describes our intended interpretation and satisfies $q(c)$. So, we see the learning task as inferring a correct interpretation with respect to an incomplete set of formulas.
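The completion step can be illustrated with the data of Table 2. The sketch below assumes that the positive examples amount to the facts q(a) and q(b) once the background knowledge is taken into account, and it hard-codes the hypothesis p(X) → q(X) as a predicate-renaming step; both simplifications and the function name `apply_hypothesis` are assumptions made for illustration only.

```python
# Sketch: applying the hypothesis p(X) -> q(X) to the data of Table 2.
# Assumption: atoms are (predicate, constant) tuples; names are hypothetical.

background = {("p", "a"), ("p", "b"), ("p", "c")}
positive = {("q", "a"), ("q", "b")}   # facts asked for by the positive examples
negative = {("q", "d")}


def apply_hypothesis(atoms, body_pred="p", head_pred="q"):
    """Return every head atom whose body atom is among the given atoms."""
    return {(head_pred, arg) for (pred, arg) in atoms if pred == body_pred}


derived = apply_hypothesis(background)
prediction = derived - positive            # facts that were not given
consistent = derived.isdisjoint(negative)  # no negative example is derived

print(prediction, consistent)
```

The only derived fact that was not given is q(c), which is the prediction listed in Table 2, and the negative example q(d) is not derived.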

Many learning systems make special assumptions in order to infer the correct interpretation. For example, FOIL [Quinlan, 1990] uses two assumptions, CWA and ODA. Let us recall how FOIL can be used:

1. A setof p ositive examples E +

ispresented tothesystem.

2. ThesetE willb egeneratedaccordingtotheclosedworldassumption(CWA)with

resp ect totheminimal signatureofE +

.

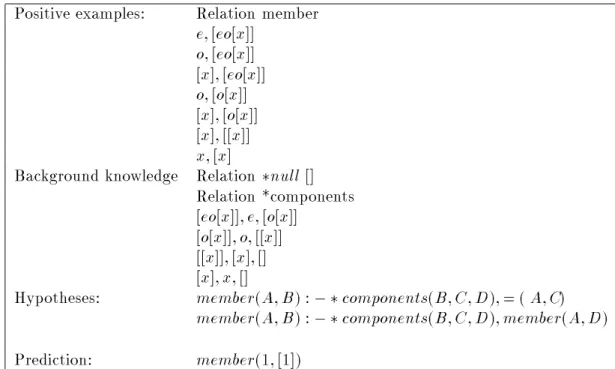

Positive examples: Relation memb er

e;[eo[x]]

o;[eo[x]]

[x];[eo[x]]

o;[o[x]]

[x];[o[x]]

[x];[[x]]

x;[x]

Backgroundknowledge Relation nul l[]

Relation *comp onents

[eo[x]];e;[o[x]]

[o[x]];o;[[x]]

[[x]];[x];[]

[x];x;[]

Hyp otheses: member (A;B): components(B;C ;D );=(A;C)

member (A;B): components(B;C ;D );member (A;D )

Prediction: member (1;[1])

Table 3: FOIL learns memb er

4. ThepredictionisnotaectedbytheCWAaccordingtotheop endomainassumption

(ODA).

Table 3 shows positive examples of the predicate member and background knowledge in the form of the predicates null and components. FOIL learns two hypotheses which describe the positive examples and do not describe the negative examples. The negative examples are like null([x]) and are generated by the CWA. The prediction is, for example, member(1,[1]), which is not affected by the CWA, although it is not mentioned as a positive example.
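To make the role of the two learned clauses concrete, here is a small Python sketch that evaluates them over the components relation of Table 3. List constants are kept as opaque strings, as in the table. The extra tuple components([1], 1, []) is an assumption: it stands for the extended domain that the ODA permits and is not part of the original background knowledge.

```python
# Sketch: evaluating the two member clauses of Table 3.
# Assumption: components is stored as {list: (head, tail)}; names are hypothetical.

components = {
    "[eo[x]]": ("e", "[o[x]]"),
    "[o[x]]": ("o", "[[x]]"),
    "[[x]]": ("[x]", "[]"),
    "[x]": ("x", "[]"),
}


def member(a, b, comps):
    """member(A,B) :- components(B,C,D), A = C.
    member(A,B) :- components(B,C,D), member(A,D)."""
    if b not in comps:          # no components tuple for B, e.g. the empty list
        return False
    head, tail = comps[b]
    return a == head or member(a, tail, comps)


positives = [
    ("e", "[eo[x]]"), ("o", "[eo[x]]"), ("[x]", "[eo[x]]"),
    ("o", "[o[x]]"), ("[x]", "[o[x]]"), ("[x]", "[[x]]"), ("x", "[x]"),
]
covered = all(member(a, b, components) for a, b in positives)

# Extended domain (assumed): a new constant 1 and the list [1].
extended = dict(components)
extended["[1]"] = ("1", "[]")
predicted = member("1", "[1]", extended)

print(covered, predicted)
```

Within the original domain, a tuple such as member(x, [eo[x]]) is not derived, which matches its status as a negative example under the CWA.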

First, we cannot use the CWA as defined by [Lloyd, 1987] in logic programming (see footnote 2). Lloyd and others relate the CWA to the domain closure assumption (DCA), whereas we need a CWA which is related to the ODA.

Hence $\mathrm{CWA}_{LP}$ is not equal to $\mathrm{CWA}_{ILP}$, but then we have to ask what the formal definition of the $\mathrm{CWA}_{ILP}$ is, or why the prediction is not influenced by the $\mathrm{CWA}_{ILP}$. Obviously, the definition of the $\mathrm{CWA}_{ILP}$ must allow for prediction.

One solution was presented by Helft [Helft, 1989]. He relates the CWA to the Open Domain Assumption (ODA). Helft proposes that only a subset of the formulas according to a given signature should be influenced by the $\mathrm{CWA}_{ILP}$. Table 4 shows an example of Helft's framework with a given logic program. For the logic program he constructs a minimal model and then finds hypotheses which have to be valid in this minimal model.

2 The CWA determines a unique model, because equality axioms, freeness axioms and the domain closure axioms restrict the domain to ground terms, and the set of formulas and the completion determines the

Logic Program:
    deputy(tom). deputy(x) $\rightarrow$ corrupt(x).
    rich(tom). rich(bill).

Minimal Model:
    deputy(tom), corrupt(tom), rich(tom), rich(bill)

Hypotheses:
    $\varphi_1$: rich(x) $\leftarrow$ deputy(x)
    $\varphi_2$: rich(x) $\leftarrow$ corrupt(x)
    $\varphi_3$: corrupt(x) $\leftarrow$ rich(x)

    $I_{CWA} \models \varphi_1, \varphi_2$

Table 4: Example of Helft

In the example, the rules $\varphi_1$ and $\varphi_2$ are valid. Finally, he applies the hypotheses according to the ODA.

But Helft does not give any formal definition of the $\mathrm{CWA}_{ILP}$ and the ODA. In the next section we give definitions of these assumptions based on a three-valued semantics. Three-valued semantics are widely used in logic programming, see [Fitting, 1985] or [Shepherdson, 1987], but we cannot transfer their techniques identically. In logic programming, three-valued semantics are motivated by the observation that each logic program succeeds, fails or goes on forever. So it is natural to use three values in the semantics of logic programs.

In ILP we have a similar case: each clause is true, false or unknown, because the prediction is a set of propositions which we do not know. They may be true or false.

In the words of Kowalski [Kowalski, 1989], logic programs or databases have a static representation, whereas in ILP sets of formulas should have a dynamic representation. This seems very natural, because in learning it should be possible to have something to learn which is currently unknown and later known.

3 A logical framework

In this section we formalize our logical framework. We define the term minimal signature and the three assumptions $\mathrm{CWA}_{ILP}$, OWA and ODA. We then give a semantics of logic programs for ILP based on partial Herbrand interpretations and describe the validity of hypotheses. For logical definitions of first-order languages we refer to [Apt, 1990]. We restrict ourselves to the languages of Horn clause logic, which are mainly used in ILP. Any undefined expressions can be found in [Lloyd, 1987] or [Apt, 1990].

3.1 Assumptions in ILP

In the following, using $\Sigma = \{PS, FS, V\}$ we denote a signature where $PS$ is a set of

usual. $\mathrm{TERM}(\Sigma)$ is defined as the set of terms. A signature is the specific part of a language.

Let us define what is meant by the term minimal generating signature.

Definition 2 (Generating signature) Let $\Sigma = \{PS, FS, V\}$ be a signature, $X$ a set of formulas. We say that $\Sigma$ generates $X$ iff for every $A \in X$ it holds that $A \in \mathrm{FORM}(\Sigma)$.

Definition 3 (Minimal generating signature) Let $\Sigma = \{PS, FS, V\}$, $\Sigma' = \{PS', FS', V'\}$ be two signatures and $X$ a set of formulas. We write $\Sigma \leq \Sigma'$ iff $PS \subseteq PS'$, $FS \subseteq FS'$. We say that $\Sigma$ is a minimal signature w.r.t. $X$ iff

1. $\Sigma$ generates $X$

2. for every generating signature $\Sigma'$ of $X$, $\Sigma$ is minimal under $\leq$.
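Definitions 2 and 3 can be sketched for the simple case of ground atoms over constants. The fragment below assumes atoms of the form (predicate, constant, ...) without nested function terms and ignores the variable set $V$; the helper names `signature_of`, `generates` and `leq` are hypothetical.

```python
# Sketch: generating and minimal generating signatures for flat ground atoms.
# Assumption: atoms are tuples (predicate, arg1, ...) whose arguments are constants.

def signature_of(atoms):
    """Smallest (PS, FS) pair that generates the given ground atoms."""
    ps = {(atom[0], len(atom) - 1) for atom in atoms}  # predicate symbols with arity
    fs = {arg for atom in atoms for arg in atom[1:]}   # constants as 0-ary function symbols
    return ps, fs


def generates(signature, atoms):
    """True if every atom only uses symbols of the signature."""
    ps, fs = signature
    return all((a[0], len(a) - 1) in ps and set(a[1:]) <= fs for a in atoms)


def leq(sig1, sig2):
    """sig1 <= sig2 iff PS and FS are included componentwise."""
    return sig1[0] <= sig2[0] and sig1[1] <= sig2[1]


X = {("deputy", "tom"), ("rich", "tom"), ("rich", "bill")}
minimal = signature_of(X)
larger = (minimal[0] | {("corrupt", 1)}, minimal[1] | {"hank"})

print(generates(minimal, X), generates(larger, X), leq(minimal, larger))
```

Both signatures generate X, but only the first is minimal: the larger one additionally contains the predicate corrupt and the constant hank, which is the kind of extension the ODA is meant to leave untouched.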

The point is that the prediction should not be affected by the CWA. Thus, the completion of the given set of formulas is only done w.r.t. the minimal signature $\Sigma$. In Table 3 we generalize the positive and negative examples to the hypotheses (member(A,B) :- components(B,C,D), ...), which are also valid for numbers, e.g. member(1,[1]).

The idea is that if we have a model $M$ for the given incomplete set of formulas, then there is a model $M'$ for the generalization. Clearly, $M$ is not necessarily a model of these generalized formulas, because $M$ may not interpret all instantiations of these formulas. Hence, $M$ is only a partial interpretation. But partial interpretations are closely connected with a third truth value, because one can regard the uninterpreted formulas as formulas which have the truth value undefined. What happens within the generalization step is that formulas which have the truth value unknown are assigned the value true. And the ODA permits that valid formulas remain valid.

Let us put these ideas into a more precise form. According to the way FOIL can be used, we relate the ODA to a minimal signature and the CWA to a minimal model based on the minimal signature of a given set of formulas or a logic program.

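Definition 4 (Open Domain Assumption, ODA) Let $\Sigma$ be a signature, $A \in \mathrm{FORM}(\Sigma)$ a formula, $V$ a variable, $\sigma : V \rightarrow \mathrm{TERM}(\Sigma)$ a state (or variable assignment), $\sigma_G$ a ground variable assignment. (This means in $\mathrm{TERM}(\Sigma)$ are no variables.) The ODA can be stated as: if

$$M_{\Sigma} \models \sigma_G(A) \quad \text{for every state } \sigma_G$$

then for every $\Sigma' \geq \Sigma$, every state $\sigma'_G : V' \rightarrow \mathrm{TERM}(\Sigma')$ and $M_{\Sigma}$ ($M_{\Sigma'}$) is a model frame w.r.t. $\Sigma$ ($\Sigma'$)

$$M_{\Sigma'} \models \sigma'_G(A)$$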

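Definition 5 (Closed World Assumption, $\mathrm{CWA}_{ILP}$) Let $\Sigma$ be a minimal generating signature according to a set of formulas $X$, and $A$ a ground atom. $M_{min}$ is the least model of $X$. $\Vdash$ is the semantical entailment relation. Then define

$$\mathrm{CWA}(X) := \{A : M_{min} \Vdash A \text{ and } A \in \mathrm{FORM}(\Sigma)\} \cup \{\neg A : M_{min} \not\Vdash A \text{ and } A \in \mathrm{FORM}(\Sigma)\}$$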

In logic programming, OWA and CWA are used mutually exclusively [Kowalski, 1989]. Kowalski related the CWA to a static representation and the OWA to a dynamic representation. Thus, in ILP, $\mathrm{CWA}_{ILP}$ and OWA are used mutually exclusively as well. But to express the OWA we need more formal definitions and a closer look at three-valued semantics.

3.2 A partial Herbrand interpretation

Here, we follow the framework of [Wagner, 1991]. For the signature $\Sigma$, the Herbrand universe $U_{\Sigma}$ consists of all ground terms. We regard non-ground clauses as a dynamic representation of the corresponding set of ground clauses formed by means of the current domain of individuals. Therefore, a state or variable assignment is simply a function which assigns each variable a ground term. The Herbrand base, $B_{\Sigma}$, is the set of all ground atoms. We use the same symbol for syntactical and semantical objects.

$M = \langle M^+, M^- \rangle$ should characterize a partial Herbrand interpretation in which $M^+$ and $M^-$ are disjoint subsets of $B_{\Sigma}$. $M^+$ contains the objects which are true and $M^-$ contains those which are false. Partial Herbrand interpretations give rise to a model relation, $\models$, as well as to a countermodel relation, $\mathrel{=\!|}$.

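Definition 6 (Satisfaction) Let $\sigma$ be a state, $F$ a formula. We write $M \models \sigma(F)$ to say $M$ satisfies $F$ in a state $\sigma$.

$$
\begin{array}{lcl}
M \models F & \text{iff} & M \models \sigma(F) \text{ for all states } \sigma \\
M \models A & \text{iff} & A \in M^+ \\
M \models F_1 \vee \ldots \vee F_n & \text{iff} & M \models F_i \text{ for some } 1 \leq i \leq n \\
M \models F_1 \wedge \ldots \wedge F_n & \text{iff} & M \models F_i \text{ for all } i = 1, \ldots, n \\
M \models A \leftarrow F_1 \wedge \ldots \wedge F_n & \text{iff} & A \in M^+ \text{ or } F_i \in M^- \text{ for all } i = 1, \ldots, n \\
M \models \neg F & \text{iff} & M \mathrel{=\!|} F \\
M \mathrel{=\!|} A & \text{iff} & A \in M^- \\
M \mathrel{=\!|} \neg F & \text{iff} & M \models F \\
M \mathrel{=\!|} F_1 \wedge \ldots \wedge F_n & \text{iff} & M \mathrel{=\!|} F_i \text{ for all } i = 1, \ldots, n \\
M \mathrel{=\!|} F_1 \vee \ldots \vee F_n & \text{iff} & M \mathrel{=\!|} F_i \text{ for some } 1 \leq i \leq n
\end{array}
$$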

We write $M \models X$, where $X$ is a set of formulas, if $M \models A$ for each $A \in X$. The symbol $\Vdash$ denotes the semantical entailment relation. If, for example, $X$ is a set of formulas and $A$ is a formula, then we write $X \Vdash A$ iff every model of $X$ is a model of $A$, i.e. if $M \models X$ then $M \models A$.

Definition 7 (Least Model) Let $M = \langle M^+, M^- \rangle$, $M' = \langle M'^+, M'^- \rangle$ be two models for a set $X$ of formulas. We say that $M$ is a submodel of $M'$ (denoted by $M \leq M'$) iff $M^+ \subseteq M'^+$. We say that $M$ is a least model for $X$ if for every $M' \models X$, $M \leq M'$ holds.

Note that due to the fact that nothing is said about $M^-$ and $M'^-$, there can be incomparable models with respect to $\leq$. However, we now have an ordering on models and interpretations, respectively.

Let us briefly recall some results of logic programming.

Observation 1 Let $M, M'$ be two interpretations such that $M \leq M'$, $X$ a set of formulas

2. $P$ has a least model

A proper partial Herbrand interpretation determines a three-valued assignment $v_m$ on ground atoms. We use $v_m$ to describe the OWA and give an equivalent definition of $\mathrm{CWA}_{ILP}$ in terms of the truth assignment $v_m$.

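Definition 8 (Truth Assignment with OWA) Let $M = \langle M^+, M^- \rangle$ be the partial Herbrand interpretation and $A$ a ground atom. The OWA is used iff the following three-valued truth assignment, $v_m$, yields:

$$
v_m(A) = \begin{cases}
\text{true} & \text{if } M \models A \\
\text{false} & \text{if } M \mathrel{=\!|} A \\
\text{unknown} & \text{otherwise}
\end{cases}
$$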

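Definition 9 (Truth Assignment with $\mathrm{CWA}_{ILP}$) Let $M = \langle M^+, M^- \rangle$ be the partial Herbrand interpretation and $A$ a ground atom. Let $\Sigma$ be a minimal generating signature according to a set of formulas $X$. The $\mathrm{CWA}_{ILP}$ is used iff the three-valued truth assignment, $v_m$, yields:

$$
v_m(A) = \begin{cases}
\text{true} & \text{if } M \models A \text{ and } A \in \mathrm{FORM}(\Sigma) \\
\text{false} & \text{if not } M \models A \text{ and } A \in \mathrm{FORM}(\Sigma) \\
\text{unknown} & \text{otherwise}
\end{cases}
$$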
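The two truth assignments of Definitions 8 and 9 differ only in how they treat atoms that are not in $M^+$. A minimal Python sketch, assuming ground atoms encoded as (predicate, constant) tuples and using the deputy example that appears in the tables of this report, could look as follows. The function names and the explicit `base_sigma` argument, which stands for the ground atoms of $\mathrm{FORM}(\Sigma)$, are illustrative assumptions.

```python
# Sketch: three-valued truth assignments under the OWA and under CWA_ILP.
# Assumption: atoms are (predicate, constant) tuples; names are hypothetical.

def v_owa(atom, m_plus, m_minus):
    """Definition 8: false only if the atom is explicitly in M-."""
    if atom in m_plus:
        return "true"
    if atom in m_minus:
        return "false"
    return "unknown"


def v_cwa_ilp(atom, m_plus, base_sigma):
    """Definition 9: atoms of the minimal signature that are not true are false."""
    if atom not in base_sigma:
        return "unknown"
    return "true" if atom in m_plus else "false"


m_plus = {("deputy", "tom"), ("corrupt", "tom"), ("rich", "tom"), ("rich", "bill")}
m_minus = {("deputy", "bill")}
base_sigma = m_plus | {("deputy", "bill"), ("corrupt", "bill")}

print(v_owa(("corrupt", "bill"), m_plus, m_minus))
print(v_cwa_ilp(("corrupt", "bill"), m_plus, base_sigma))
print(v_cwa_ilp(("rich", "hank"), m_plus, base_sigma))
```

The atom corrupt(bill) is unknown under the OWA but false under $\mathrm{CWA}_{ILP}$, while an atom over a constant outside the minimal signature, such as rich(hank), stays unknown in both readings.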

3.3 A semantic of a program P

We now give two interpretations: $I_{CWA}$ and $I_{Neg}$. The first interpretation behaves exactly like the $\mathrm{CWA}_{ILP}$ combined with the ODA, in that those formulas which are not explicitly interpreted as true are (implicitly) false. The second interpretation, $I_{Neg}$, which corresponds to the OWA, interprets formulas as false only if they are stated explicitly as false. Hence, the interpretations are able to handle formulas which are unknown, namely those which are neither true nor false.

Let $B_{\Sigma'}$ be the Herbrand base of a logic program $P \subseteq \mathrm{FORM}(\Sigma')$ and $B_{\Sigma}$ the Herbrand base of a minimal generating signature $\Sigma \leq \Sigma'$ of $P$. To determine the least model, we introduce, following [Apt, 1990], an immediate consequence operator $T_P(I)$. Then, we iteratively apply $T_P(I)$ to construct $M^+$. The result is a least fixpoint, $M^+$, with respect to a minimal generating signature $\Sigma$ of $P$. The set $M^-$ is determined by the Herbrand base of the minimal signature without $M^+$.

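Definition 10 (T operator) The $T_P(I)$ operator maps one Herbrand interpretation to another Herbrand interpretation.

$$A \in T_P(I) \quad \text{iff} \quad \text{for some substitution } \theta \text{ and a clause } B \leftarrow B_1, \ldots, B_n \text{ of } P \text{ we have } A \equiv B\theta \text{ and } I \models (B_1, \ldots, B_n)\theta$$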

If $T_P(I_f) = I_f$ holds, $I_f$ is called a pre-fixpoint of $T$. We know from the results of logic programming that $T_P(I_f)$ is a model of $P$ if $I_f$ is a pre-fixpoint of $T_P$. Another result is that each monotonic operator $T$ has a least fixpoint, which is also its least pre-fixpoint. If $\Sigma$ is a minimal generating signature for $P$, we can construct $M^-$ as $B_{\Sigma}$ without $M^+$.

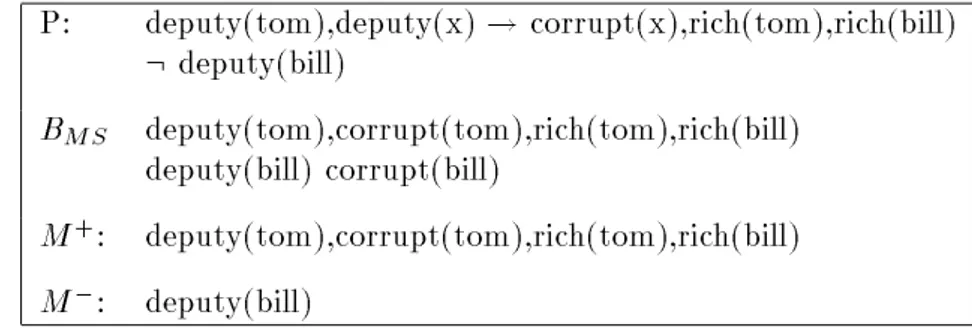

P: deputy(tom),deputy(x)! corrupt(x),rich(tom),rich(bill)

B

MS

deputy(tom),corrupt(tom),rich(tom),rich(bill)

deputy(bill) corrupt(bill)

M +

: deputy(tom),corrupt(tom),rich(tom),rich(bill)

M : deputy(bill) corrupt(bill)

Table5: Example ofI

CWA

Definition 11 (Interpretation $I_{CWA}$) Let $P$ be a program generated by a minimal signature $\Sigma$. The interpretation $I_{CWA}$ is characterized by $\langle M^+, M^- \rangle$, where $M^+$ is a pre-fixpoint of $T_P(I)$. $M^-$ is determined by $B_{\Sigma}$, or formally:

$$M^- = \{a : a \in B_{\Sigma} \text{ and } a \notin M^+\}$$

Table 5 shows a set of formulas $P$ and the corresponding Herbrand base $B_{MS}$. $M^+$ is constructed by the least model of $P$ and $M^-$ by the difference of $B_{MS}$ and $M^+$.

Observation 2 Let $P \subseteq \mathrm{FORM}(\Sigma')$ be a program and $\Sigma \leq \Sigma'$ the minimal generating signature of $P$. If $\mathrm{FORM}(\Sigma) = \mathrm{FORM}(\Sigma')$, then $M^+$ corresponds exactly to the least model of $P$.

It is easy to see that the interpretation $I_{CWA}$ corresponds to the $\mathrm{CWA}_{ILP}$ and the ODA. Now, let us formulate an interpretation which handles explicit negation under the OWA. The interpretation $I_{Neg}$ of a set of formulas with negative examples is constructed from the partial Herbrand models. $M^+$ is constructed from the least fixpoint of $T_P(I)$. The set $M^-$ is a least model of the negative examples.

Definition 12 (Interpretation $I_{Neg}$) The interpretation $I_{Neg}$ is characterized by $\langle M^+, M^- \rangle$, where $M^+$ is a pre-fixpoint of $T_P(I)$. $M^-$ is a Herbrand interpretation of the negative examples, or formally:

$$M^- = \{a : a \in E^- \text{ and } a \text{ is ground}\}$$

Clearly, formulas which are neither in $M^+$ nor in $M^-$ are unknown. We do not have a normative truth-value assignment like the CWA (those things which are unknown are false).

Table 6 shows an example of $I_{Neg}$, with a set of clauses, $P$, and the corresponding Herbrand base $B_{MS}$. $M^+$ is constructed from the least model of $P$ and $M^-$ from the given negative examples.
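The difference between the two interpretations can be made explicit with a short sketch that builds both from the same $M^+$. It assumes the ground atoms of the deputy example as (predicate, constant) tuples and takes $M^+$ as already computed; the function names `i_cwa` and `i_neg` are hypothetical.

```python
# Sketch: constructing I_CWA and I_Neg for the deputy example.
# Assumption: M+ is given; atoms are (predicate, constant) tuples.
from itertools import product

predicates = ["deputy", "corrupt", "rich"]
constants = ["tom", "bill"]
herbrand_base = set(product(predicates, constants))   # B of the minimal signature

m_plus = {("deputy", "tom"), ("corrupt", "tom"), ("rich", "tom"), ("rich", "bill")}


def i_cwa(m_plus, base):
    """I_CWA: everything in the Herbrand base that is not true is false."""
    return m_plus, base - m_plus


def i_neg(m_plus, negative_examples):
    """I_Neg: only the explicitly given negative examples are false."""
    return m_plus, set(negative_examples)


_, m_minus_cwa = i_cwa(m_plus, herbrand_base)
_, m_minus_neg = i_neg(m_plus, {("deputy", "bill")})
unknown_neg = herbrand_base - m_plus - m_minus_neg

print(sorted(m_minus_cwa), sorted(m_minus_neg), sorted(unknown_neg))
```

Under $I_{CWA}$ both deputy(bill) and corrupt(bill) are false, whereas under $I_{Neg}$ only the stated negative example deputy(bill) is false and corrupt(bill) remains unknown.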

3.4 Valid hypotheses

We define a set of hypotheses $H$ which characterizes the intended interpretation of a given

P:         deputy(tom), deputy(x) $\rightarrow$ corrupt(x), rich(tom), rich(bill),
           $\neg$deputy(bill)

$B_{MS}$:  deputy(tom), corrupt(tom), rich(tom), rich(bill),
           deputy(bill), corrupt(bill)

$M^+$:     deputy(tom), corrupt(tom), rich(tom), rich(bill)

$M^-$:     deputy(bill)

Table 6: Example of $I_{Neg}$

the $T_P$ operator the truth value true or false of the formulas which so far have the truth value unknown. Formally, the $T_P$ operator maps the truth value unknown of any formula to true or false. But first we define the set $H$ and, because of its size, two more restricted sets. The interpretation $M = \langle M^+, M^- \rangle$ is related to $I_{CWA}$ or $I_{Neg}$.

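Definition 13 (Set of Hypotheses $H$) Let $Pre \in \mathrm{FORM}(\Sigma)$, where $\Sigma$ is the minimal generating signature of the examples, and $Q \in \mathrm{FORM}(\Sigma)$. $Q$ may be a conjunction of atoms.

$$H = \{Q \leftarrow Pre : \text{for all } \sigma\ (\sigma(Q) \notin M^- \text{ or } \sigma(Pre) \notin M^+)\}$$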

Helft's idea [Helft, 1989] is to restrict this set $H$ by the condition that there is a $\sigma$ such that $\sigma(Pre) \in M^+$. This ensures that for each hypothesis both the premise and the conclusion have to be satisfied at least once. This gives a better confirmation, because, for example, if we add the negative example woman(tom), we do not have the hypothesis deputy(tom) $\leftarrow$ woman(tom), in contrast to $H$.

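Definition 14 (Helft's Set of Hypotheses, $H_{Helft}$)

$$H_{Helft} = \{Q \leftarrow Pre : \forall \sigma\ (\sigma(Q) \notin M^- \text{ or } \sigma(Pre) \notin M^+) \text{ and } \exists \sigma\ \sigma(Pre) \in M^+\}$$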

A further restriction is to regard only hypotheses with the same conclusion. This is called single predicate learning, in contrast to multiple predicate learning of the set $H$ [Muggleton, 1993].

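Definition 15 (Single Predicate Learning, $H_{SPL}$)

$$H_{SPL} = \{Q \leftarrow Pre : \forall \sigma\ (\sigma(Q) \notin M^- \text{ or } \sigma(Pre) \notin M^+) \text{ and } \exists \sigma\ \sigma(Pre) \in M^+ \text{ and } \forall i, j\ Q_i = Q_j\}$$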
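The membership conditions of $H$ and $H_{Helft}$ can be checked mechanically for simple hypotheses. The sketch below assumes hypotheses of the form head(x) ← body(x) over unary predicates, so that a state $\sigma$ reduces to the choice of one constant, and it uses the sets $M^+$ and $M^-$ of $I_{CWA}$ for the deputy example. The function names are hypothetical.

```python
# Sketch: membership tests for H and H_Helft on the deputy example.
# Assumption: hypotheses are head(x) <- body(x) with unary predicates.

constants = ["tom", "bill"]
m_plus = {("deputy", "tom"), ("corrupt", "tom"), ("rich", "tom"), ("rich", "bill")}
m_minus = {("deputy", "bill"), ("corrupt", "bill")}


def in_h(head, body, m_plus, m_minus, constants):
    """For all constants c: head(c) not in M- or body(c) not in M+."""
    return all((head, c) not in m_minus or (body, c) not in m_plus for c in constants)


def in_h_helft(head, body, m_plus, m_minus, constants):
    """Additionally, the body must be true for at least one constant."""
    return in_h(head, body, m_plus, m_minus, constants) and any(
        (body, c) in m_plus for c in constants
    )


for head, body in [("rich", "deputy"), ("rich", "corrupt"), ("corrupt", "rich")]:
    print(head, "<-", body, in_h(head, body, m_plus, m_minus, constants))

# Adding the negative example woman(tom):
m_minus_w = m_minus | {("woman", "tom")}
print(in_h("deputy", "woman", m_plus, m_minus_w, constants),
      in_h_helft("deputy", "woman", m_plus, m_minus_w, constants))
```

The first two hypotheses are accepted and corrupt(x) ← rich(x) is rejected because of bill, in line with Table 4. The hypothesis deputy(x) ← woman(x) is in $H$ only vacuously, since its premise is never true, and it is excluded from $H_{Helft}$.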

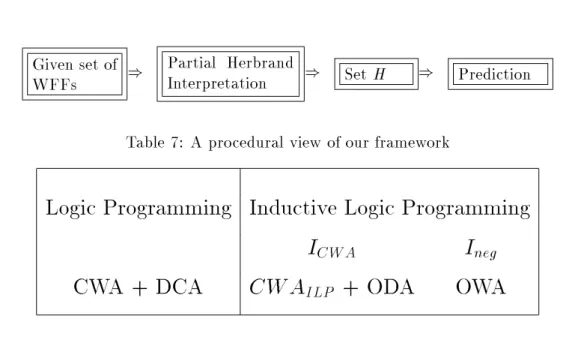

Table7 shows a pro ceduralview of ourframework. We determinefrom agiven set of

formulasthe partialHerbrand interpretation. Weconstructthen aset H and trytomap

thetruthvalueunknowntotrueorfalsewiththeT

P

op erator. Hence,wehavedetermined

Givensetof

WFFs

)

Partial Herbrand

Interpretation

)

SetH )

Prediction

Table 7: A pro cedural view ofourframework

Logic Programming      Inductive Logic Programming
                       $I_{CWA}$                        $I_{neg}$
CWA + DCA              $\mathrm{CWA}_{ILP}$ + ODA       OWA

Table 8: Summary of the assumptions

3.5 Summary

We have related the OWA to the interpretation $I_{neg}$ and the combination of $\mathrm{CWA}_{ILP}$ and ODA to the interpretation $I_{CWA}$ (Table 8). The set of hypotheses is determined with respect to the interpretation and is the set of all possible hypotheses. Several learning algorithms restrict this set; for example, FOIL with single predicate learning uses only a subset with always the same conclusion, in contrast to multiple predicate learning. In the next section we point out several relations which can be discarded using this framework.

4 Relations

4.1 Helft: induction as nonmonotonic inference

In the following we restrict the framework of [Helft, 1989] to Horn clause logic. It is easy to see that the minimal models coincide with the least fixpoint under a Herbrand interpretation.

Definition 16 (Helft's language) Helft uses a language which is restricted by the following items:

1. Groundable: Function symbols are not used and every variable in the head of the clause has to appear in the body. This ensures that a finite least model always exists.

2. Injective: There is a substitution or state $\sigma$, mapping the literals of $P$ onto elements of $A$, such that for every pair of variables $x, y$ of $P$, $\sigma(x) \neq \sigma(y)$. This ensures that the set of generalizations is finite and no unnecessary variables are introduced.

Helft constructs a minimal model with respect to a set of formulas. In our terminology, $M^+$ corresponds to the least model and $M^-$ is the Herbrand base without the least model

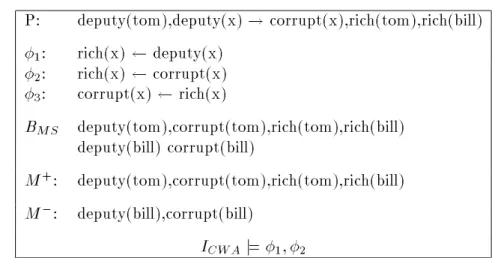

P: deputy(tom),deputy(x)! corrupt(x),rich(tom),rich(bill)

1

: rich(x) deputy(x)

2

: rich(x) corrupt(x)

3

: corrupt(x) rich(x)

B

MS

deputy(tom),corrupt(tom),rich(tom),rich(bill)

deputy(bill)corrupt(bill)

M +

: deputy(tom),corrupt(tom),rich(tom),rich(bill)

M : deputy(bill),corrupt(bill)

I

CWA j=

1

;

2

Table 9: Example of Helft

we always have a unique minimal model. Helft has to distinguish between strong and weak generalizations.

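Definition 17 ($\Gamma(\Delta)$) Let $M$ be an interpretation and $\varphi = P \leftarrow Q$ a clause. $\Delta$ is a finite set of groundable clauses and $\Gamma(\Delta)$ is the set of generalizations, which are defined as:

$M \models \varphi$ and $M \models \sigma(Q)$, where $\sigma(Q)$ is one ground instance and $Q$ is injective over $M$.

$\Delta \not\Vdash \varphi$

For every clause $\varphi'$ of $\Gamma(\Delta)$, if $\varphi' \Vdash \varphi$ then $\varphi \Vdash \varphi'$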

Theorem 1 $I_{CWA}(\Delta) \models \Gamma(\Delta)$

Proof

Assume that $I_{CWA}(\Delta) \not\models P \leftarrow Q$ and $P \leftarrow Q \in \Gamma(\Delta)$. Without loss of generality assume that the rule is ground. Note that $Q$ can be a conjunction of atoms.

We know from the definition of satisfiability that $P \notin M^+$ and that $Q \notin M^-$. We also know that $P$ and $Q$ are in the minimal signature $\Sigma$. Therefore $P \in M^-$ and $Q \in M^+$, because all members of $B_P$ are by definition in $M^+$ or in $M^-$.

In the words of [Helft, 1989], $M \not\models P \leftarrow Q$. This contradicts the first condition of Helft's definition. Therefore $P \leftarrow Q$ is not in $\Gamma(\Delta)$, and this concludes our proof. $\Box$

Table 9 shows an example of Helft, in which each proposed generalization is satisfied by $I_{CWA}(\Delta)$.

4.2 FOIL in a logical framework

We show in this section that FOIL is an example of a learning system based on Plotkin's conditions. In another view, FOIL is an example of Helft's framework. Both fit into our logical framework. We do not want to investigate the information-based selection of a certain generalization out of the hypothesis set. We do not consider anything like predicate invention in this framework. We also do not allow rules with negative literals in the body.

Input of FOIL:     p: bill, tom, hank, joe, pete, carl, go.
                   rich(p) bill,+ tom,+.
                   *corrupt(p) tom,+.
                   *deputy(p) tom,+; bill,-.

Output of FOIL:    *** Warning: the following definition
                   *** does not cover 1 tuple in the relation
                   rich(A) :- *corrupt(A)

$M^+$:             deputy(tom), corrupt(tom), rich(tom), rich(bill)

$M^-$:             deputy(bill)

Table 10: Example of FOIL

FOIL is a learning system which infers from a training set of examples a formula which describes the selected concept. The examples are given by ground atoms and are divided into positive examples and background knowledge. FOIL selects the best hypothesis with an information-based measure that covers as many as possible of the positive examples and no negative example. FOIL works in two modes. In one mode, only positive examples are given, and the CWA is used to construct negative examples. This corresponds to our interpretation $I_{CWA}$. The other mode, in which positive and negative examples are given, corresponds to our interpretation $I_{Neg}$. Our observation that each hypothesis is a member of our hypotheses set $H$ is independent of the chosen model.

Table 10 shows FOIL applied to the example of Helft. The rule is valid because the conditions of Plotkin are fulfilled. The only exception is that rich(bill) cannot be deduced from the hypothesis and the background knowledge.

Theorem 2 Each FOIL learning result is a member of $H_{SPL}$.

Proof:

This is an easy proof because $H_{SPL}$ is constructed exactly to fulfill the conditions of Plotkin and Helft. First, we show this for the case where we explicitly give positive and negative examples, and second, for the case where FOIL uses the CWA. $P \leftarrow Q$ is a FOIL learning result.

1. Here the conditions of Plotkin are fulfilled. By definition of the set $H_{SPL}$, we know that $P \notin M^-$ or $Q \notin M^+$. Then we see that $P$ also has to be in $M^+$, because $Q \in M^+$, and we want to deduce $P$ from the hypothesis. The consistency is guaranteed by the disjointness of $M^+$ and $M^-$.

2. In this case, we know that all positive examples are in $M^+$, and if we want to deduce the positive examples, $P$ also has to be in $M^+$. To deduce the positive examples, we also need to satisfy the background knowledge. This means that for instance

4.3 Plotkin's inductive task

Following the framework of [Plotkin, 1971], the inductive learning task can be described using a background theory $B$, a set of positive examples $E^+$, a set of negative examples $E^-$, a hypothesis $H$, and a partial ordering relation $\leq$. The conditions are:

1. The background theory should not entail the positive examples, $B \not\Vdash E^+$ (prior necessity),

2. and it should be consistent with the examples, $B, E^-, E^+ \not\Vdash \bot$ (prior satisfiability).

3. The background theory and the hypothesis have to entail the positive examples, $B, H \Vdash E^+$ (posterior sufficiency).

4. The background theory and the hypothesis should not entail the negative examples, $B, H, E^- \not\Vdash \bot$ (posterior satisfiability).

5. We should use the most specific hypothesis that fulfills the above requirements. Therefore we have an ordering on the hypotheses, where $h_1 \leq h_2$ means $h_1$ is more general than $h_2$.
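The first four conditions can be checked directly in a small ground setting. The sketch below uses the data of Table 2 and makes several simplifying assumptions: atoms are (predicate, constant) tuples, the hypothesis is a single rule head(X) ← body(X) whose head predicate differs from its body predicate (so one application step suffices), and negative examples are read as atoms that must not be derivable. The function names are hypothetical, and the ordering of condition 5 is not modelled.

```python
# Sketch: checking Plotkin's conditions 1-4 for a single-rule hypothesis.
# Assumption: ground unary atoms; the rule is (head predicate, body predicate).

def closure(facts, rule=None):
    """Facts plus, if a rule is given, one application of head(X) <- body(X)."""
    derived = set(facts)
    if rule is not None:
        head, body = rule
        derived |= {(head, arg) for (pred, arg) in facts if pred == body}
    return derived


def plotkin_conditions(background, e_plus, e_minus, hypothesis):
    before = closure(background)
    after = closure(background, hypothesis)
    return {
        "prior necessity": not e_plus <= before,
        "prior satisfiability": before.isdisjoint(e_minus) and e_plus.isdisjoint(e_minus),
        "posterior sufficiency": e_plus <= after,
        "posterior satisfiability": after.isdisjoint(e_minus),
    }


background = {("p", "a"), ("p", "b"), ("p", "c")}
e_plus = {("q", "a"), ("q", "b")}
e_minus = {("q", "d")}

result = plotkin_conditions(background, e_plus, e_minus, ("q", "p"))
print(result)
```

With the hypothesis q(X) ← p(X) all four checks succeed. If q(c) were given as a negative example instead of q(d), posterior satisfiability would fail, because the hypothesis derives q(c).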

Plotkin has proven that, in general, there is no hypothesis which covers exactly the positive examples and nothing else. In general, we can deduce from a hypothesis facts which are not known. We call this set of facts the prediction. To see that the set $H$ fulfills the conditions of Plotkin, we test each condition:

1. The background theory should not entail the positive examples. This can be guaranteed by the restriction that the background theory and the examples are disjoint.

2. That the background knowledge and the examples should be consistent is satisfied by the restriction that $M^+$ and $M^-$ are disjoint.

3. The condition $B, H \models E^+$ guarantees that we can conclude the positive examples from the hypothesis and the background knowledge. This means that if the body of each hypothesis is in $M^+$, we can deduce the head. Therefore $Q \in M^+$.

4. The consistency is satisfied by the restriction that $M^+$ and $M^-$ are disjoint.

4.4 Comparison with other three-valued logics

Using our framework, the underlying logic of ILP can be compared with other three-valued logics fairly easily. We will compare our three-valued logic with the logics of Kleene and Łukasiewicz, [Turner, 1984], where we only regard the implication.

First let us look at Kleene's strong three-valued implication. Kleene's concern was to model undecidable mathematical statements; that means his third truth value, undecided, represents neither true nor false, but rather a state of partial ignorance [Turner, 1984]. We believe that this is common to our approach, where the third truth value also indicates that we do not know anything. Nevertheless, we have a very optimistic view in learning, whereas Kleene did not want to conclude something known from an unknown sentence, especially in the case $u \to u$, which is undecided in his logic.

Logic of ILP:

Pre → Q | t  f  u
   t    | t  f  t
   f    | t  t  t
   u    | t  t  t

Kleene's logic:

Pre → Q | t  f  u
   t    | t  f  u
   f    | t  t  t
   u    | t  u  u

Łukasiewicz's logic:

Pre → Q | t  f  i
   t    | t  f  i
   f    | t  t  t
   i    | t  i  t

Table 11: Comparison of logics (rows give the truth value of Pre, columns the truth value of Q)

For a second comparison let us look at Łukasiewicz's three-valued logic. In this logic the case $u \to u$ (written $i \to i$ in Table 11) is true. Turner points out that the difference originates from the different interpretations of the third truth value. Whereas in the context of Kleene this third truth value is a truth gap, Łukasiewicz assigned the third truth value to a statement if no assignment of true or false is possible. However, he deals with statements about the future.

Again we have the most optimistic view of the implication. This means that if it is possible that a fact implies another fact, this statement is true. The other semantics are more cautious, because obviously this can be false.
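The comparison in Table 11 can be made concrete with a small sketch. The code below transcribes the three implication tables and lists the entries on which they disagree. Two things are assumptions for illustration and are not stated in the report: Łukasiewicz's third value $i$ is written as `u` so that the tables can be compared entry by entry, and the function `optimistic` encodes one possible reading of the "optimistic view", namely that an implication is true as soon as some resolution of the unknown values to true or false makes the classical implication true.

```python
from itertools import product

T, F, U = "t", "f", "u"
VALUES = (T, F, U)

# table[premise][conclusion], transcribed row by row from Table 11.
ILP = {
    T: {T: T, F: F, U: T},
    F: {T: T, F: T, U: T},
    U: {T: T, F: T, U: T},
}
KLEENE = {
    T: {T: T, F: F, U: U},
    F: {T: T, F: T, U: T},
    U: {T: T, F: U, U: U},
}
# Assumption: Lukasiewicz's third value i is identified with u here.
LUKASIEWICZ = {
    T: {T: T, F: F, U: U},
    F: {T: T, F: T, U: T},
    U: {T: T, F: U, U: T},
}


def differences(a, b):
    """List the (premise, conclusion) pairs on which two tables disagree."""
    return [(p, q) for p, q in product(VALUES, repeat=2) if a[p][q] != b[p][q]]


def completions(value):
    """Classical values an entry may take once an unknown is resolved."""
    return (T, F) if value == U else (value,)


def optimistic(p, q):
    """Return t if some resolution of the unknowns makes p -> q classically true."""
    possible = any(
        cp == F or cq == T
        for cp in completions(p)
        for cq in completions(q)
    )
    return T if possible else F


ilp_vs_kleene = differences(ILP, KLEENE)
ilp_vs_lukasiewicz = differences(ILP, LUKASIEWICZ)
kleene_vs_lukasiewicz = differences(KLEENE, LUKASIEWICZ)
```

Under these assumptions, the ILP table differs from Kleene's table in the three entries where Kleene yields the undecided value, Kleene and Łukasiewicz differ only in the case $u \to u$, and the ILP table coincides with `optimistic` on all nine entries.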

5 Conclusions and further works

In this work, we have compared the basic assumptions of logic programming and ILP. Then, we have given a three-valued model-based semantics of ILP and shown that FOIL fits in this framework. The semantics reflects the two main assumptions which we need in machine learning. Finally, we have related our framework to other work in ILP and to other three-valued logics.

Currently we are implementing our framework with ISABELLE, [Paulson, 1989], and doing experiments. We are also evaluating more restrictions on the set $H$.

In further work we want to show the need to handle more than three truth values in machine learning. For example, we will show that it is useful to handle inconsistencies in an incremental learning system. Inconsistencies arise often, and because making revisions is an expensive task, we want to be able to postpone revisions until a less busy time.

Acknowledgments

We would like to thank Katharina Morik and the members of Computer Science VIII of the University of Dortmund. This work was partly supported by the European Community

References

[Apt, 1990] Apt, K. (1990). Logic programming. In van Leeuwen, J., editor, Handbook of Theoretical Computer Science, Volume B. Elsevier.

[Apt and van Emden, 1982] Apt, K. and van Emden, M. (1982). Contributions to the theory of logic programming. ACM, 29(3).

[Clark, 1978] Clark, K. (1978). Negation as failure. In Gallaire, H. and Minker, J., editors, Logic and Data Bases, pages 293-322. Plenum Press, New York.

[DeRaedt and Bruynooghe, 1989] DeRaedt, L. and Bruynooghe, M. (1989). Towards friendly concept-learners. In Proc. of the 11th Int. Joint Conf. on Artif. Intelligence, pages 849-854, Los Altos, CA. Morgan Kaufmann.

[Fitting, 1985] Fitting, M. (1985). A Kripke-Kleene semantics for general logic programs. Logic Programming, 2:295-312.

[Helft, 1989] Helft, N. (1989). Induction as nonmonotonic inference. In Proceedings of the 1st International Conference on Knowledge Representation and Reasoning.

[Kietz and Wrobel, 1991] Kietz, J.-U. and Wrobel, S. (1991). Controlling the complexity of learning in logic through syntactic and task-oriented models. In Muggleton, S., editor, Inductive Logic Programming, chapter 16, pages 335-360. Academic Press, London. Also available as Arbeitspapiere der GMD No. 503, 1991.

[Kowalski, 1979] Kowalski, R. (1979). Logic for Problem Solving. Elsevier Science Publishing Co. Inc.

[Kowalski, 1989] Kowalski, R. (1989). Logic for data description. In Mylopoulos, J. and Brodie, M., editors, Readings in Artificial Intelligence and Databases. Morgan Kaufmann.

[Lloyd, 1987] Lloyd, J. (1987). Foundations of Logic Programming. Springer Verlag, Berlin, New York, 2nd edition.

[Muggleton, 1990] Muggleton, S. (1990). Inductive logic programming. In Proceedings of the 1st Conference on Algorithmic Learning Theory.

[Muggleton, 1993] Muggleton, S. (1993). Inductive logic programming: Derivations, successes and shortcomings. In Brazdil, P., editor, ECML-93, European Conference on Machine Learning.

[Muggleton and Feng, 1992] Muggleton, S. and Feng, C. (1992). Efficient induction of logic programs. In Muggleton, S., editor, Inductive Logic Programming, chapter 13, pages 281-298. Academic Press, London.

[Paulson, 1989] Paulson, J. (1989). The foundation of a generic theorem prover. Auto-

[Plotkin, 1971] Plotkin, G. D. (1971). A further note on inductive generalization. In Meltzer, B. and Michie, D., editors, Machine Intelligence, chapter 8, pages 101-124. American Elsevier.

[Quinlan, 1990] Quinlan, J. (1990). Learning logical definitions from relations. Machine Learning, 5(3):239-266.

[Reiter, 1978] Reiter, R. (1978). On closed world data bases. In Gallaire, H. and Minker, J., editors, Logic and Data Bases. Plenum Press, New York.

[Shepherdson, 1987] Shepherdson, J. (1987). Negation in logic programming. In Minker, J., editor, Foundations of Deductive Databases and Logic Programming. Morgan Kaufmann Publishers Inc.

[Turner, 1984] Turner, R. (1984). Logics for Artificial Intelligence. Ellis Horwood Limited.

[Wagner, 1991] Wagner, G. (1991). Logic programming with strong negation and inexact predicates. Logic and Computation, 1(6):835-859.