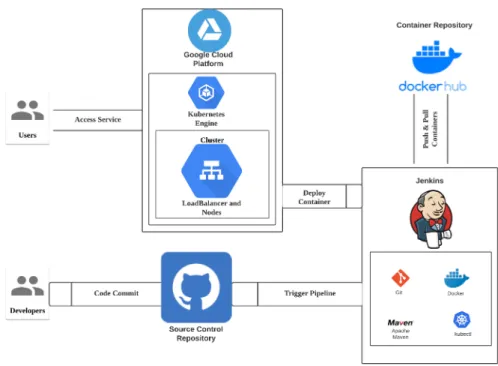

Containerization of a Web Application Using Docker and Container Orchestration using Kubernetes

Volltext

Abbildung

![Figure 2: Kubernetes Architecture [8]](https://thumb-eu.123doks.com/thumbv2/1library_info/4258275.1565037/5.892.238.653.195.535/figure-kubernetes-architecture.webp)

ÄHNLICHE DOKUMENTE

Durch Docker haben sich Linux-Container zu einer wahrhaft umwälzenden Technologie gemausert, die das Zeug hat, die IT-Landschaft sowie die zugehörigen Ökosysteme und Märkte

Durch Docker haben sich Linux-Container zu einer wahrhaft umwälzenden Technologie gemausert, die das Zeug hat, die IT-Landschaft sowie die zugehörigen Ökosysteme und Märkte

Durch Docker haben sich Linux-Container zu einer wahrhaft umwälzenden Technologie gemausert, die das Zeug hat, die IT-Landschaft sowie die zugehörigen Ökosysteme und Märkte

Durch Docker haben sich Linux-Container zu einer wahrhaft umwälzenden Technologie gemausert, die das Zeug hat, die IT-Landschaft sowie die zugehörigen Ökosysteme und Märkte

Durch Docker haben sich Linux-Container zu einer wahrhaft umwälzenden Technologie gemausert, die das Zeug hat, die IT-Landschaft sowie die zugehörigen Ökosysteme und Märkte

Durch Docker haben sich Linux-Container zu einer wahrhaft umwälzenden Technologie gemausert, die das Zeug hat, die IT-Landschaft sowie die zugehörigen Ökosysteme und Märkte

Durch Docker haben sich Linux-Container zu einer wahrhaft umwälzenden Technologie gemausert, die das Zeug hat, die IT-Landschaft sowie die zugehörigen Ökosysteme und Märkte

Durch Docker haben sich Linux-Container zu einer wahrhaft umwälzenden Technologie gemausert, die das Zeug hat, die IT-Landschaft sowie die zugehörigen Ökosysteme und Märkte