The JML and JUnit Way of Unit Testing and its Implementation

Yoonsik Cheon and Gary T. Leavens TR #04-02

February 2004

Keywords: Unit testing, automatic test oracle generation, testing tools, runtime assertion checking, formal methods, programming by contract, JML language, JUnit testing framework, Java language.

2000 CR Categories: D.2.1 [Software Engineering] Requirements/Specifications — languages, tools, JML; D.2.2 [Software Engineering] Design Tools and Techniques — computer-aided software engineering (CASE); D.2.4 [Software Engineering] Software/Program Verification — Assertion checkers, class invariants, formal methods, programming by contract, reliability, tools, validation, JML; D.2.5 [Software Engineering] Testing and Debugging — Debugging aids, design, monitors, testing tools, theory, JUnit; D.3.2 [Programming Languages] Language Classifications — Object-oriented languages; F.3.1 [Logics and Meanings of Programs] Specifying and Verifying and Reasoning about Programs — Assertions, invariants, pre- and post-conditions, specification techniques.

Submitted for publication

An earlier version of this paper appeared in the European Conference on Object-Oriented Programming (ECOOP), Málaga, Spain, June 10-14, 2002. Lecture Notes in Computer Science, pp. 231-255, Springer-Verlag. That version was copyright Springer-Verlag, 2002.

Department of Computer Science

226 Atanasoff Hall

Iowa State University

Ames, Iowa 50011-1041, USA


Yoonsik Cheon ∗
The University of Texas at El Paso

Gary T. Leavens
Iowa State University

February 18, 2004

Abstract

Writing unit test code is labor-intensive, hence it is often not done as an integral part of programming. However, unit testing is a practical approach to increasing the correctness and quality of software; for example, Extreme Programming relies on frequent unit testing.

In this paper we present a new approach that makes writing unit tests easier. It uses a formal specification language’s runtime assertion checker to decide whether methods are working correctly; thus code to decide whether tests pass or fail is automatically produced from specifications. Our tool combines this testing code with hand-written test data to execute tests.

Therefore, instead of writing testing code, the programmer writes formal specifications (e.g., pre- and postconditions). This makes the programmer’s task easier, because specifications are more concise and abstract than the equivalent test code, and hence more readable and maintainable.

Furthermore, by using specifications in testing, specification errors are quickly discovered, so the specifications are more likely to provide useful documentation and inputs to other tools. In this paper we describe an implementation using the Java Modeling Language (JML) and the JUnit testing framework, but the approach could be easily implemented with other combinations of formal specification languages and unit testing tools.

1 Introduction

Program testing is an effective and practical way of improving the correctness of software, and thereby improving software quality. It has many benefits when compared to more rigorous methods like formal reasoning and proof, such as simplicity, practicality, cost effectiveness, immediate feedback, understandability, and so on. There is a growing interest in applying program testing to the development process, as reflected by the Extreme Programming (XP) approach [6]. In XP, unit tests are viewed as an integral part of programming. Tests are created before, during, and after the code is written, often emphasized as “code a little, test a little, code a little, and test a little ...” [7]. The philosophy behind this is to use regression tests [33] as a practical means of supporting refactoring.

∗ An earlier version of this work, with a different implementation, was described in our ECOOP 2002 paper [15].

The work of both authors was supported in part by a grant from Electronics and Telecommunications Research Institute (ETRI) of South Korea, and by grants CCR-0097907 and CCR-0113181 from the US National Science Foundation.

Authors’ addresses: Department of Computer Science, The University of Texas at El Paso, 500 W. University Ave., El Paso, Texas 79902; email: cheon@cs.utep.edu; Department of Computer Science, Iowa State University, 226 Atanasoff Hall, Ames, Iowa 50011; email: leavens@cs.iastate.edu.

1.1 The Problem

However, writing unit tests is a laborious, tedious, cumbersome, and often difficult task. If the testing code is written at a low level of abstraction, it may be tedious and time-consuming to change it to match changes in the code. One problem is that there may simply be a lot of testing code that has to be examined and revised. Another problem occurs if the testing program refers to details of the representation of an abstract data type; in this case, changing the representation may require changing the testing program.

To avoid these problems, one should automate more of the writing of unit test code. The goal is to make writing testing code easier and more maintainable.

One way to do this is to use a framework that automates some of the details of running tests. An example of such a framework is JUnit [7]. It is a simple yet practical testing framework for Java classes; it encourages the close integration of testing with development by allowing a test suite to be built incrementally.

However, even with tools like JUnit, writing unit tests often requires a great deal of effort. Separate testing code must be written and maintained in synchrony with the code under development, because the test class must inherit from the JUnit framework. This test class must be reviewed when the code under test changes, and, if necessary, also revised to reflect the changes. In addition, the test class suffers from the problems described above. The difficulty and expense of writing the test class are exacerbated during development, when the code being tested changes frequently. As a consequence, during development there is pressure to not write testing code and to not test as frequently as might be optimal.

We encountered these problems ourselves in writing Java code. The code we have been writing is part of a tool suite for the Java Modeling Language (JML) [10]. JML is a behavioral interface specification language for Java [37, 36]. In our implementation of these tools, we have been formally documenting the behavior of some of our implementation classes in JML. This enabled us to use JML’s runtime assertion checker to help debug our code [9, 13, 14]. In addition, we have been using JUnit as our testing framework. We soon realized that we spent too much time writing test classes and maintaining them. In particular we had to write many query methods to determine test success or failure. We often also had to write code to build expected results for test cases. We also found that refactoring made testing painful; we had to change the test classes to reflect changes in the refactored code. Changing the representation data structures for classes also required us to rewrite code that calculated expected results for test cases.

While writing unit test methods, we soon realized that most often we were translating method pre- and postconditions into the code in corresponding testing methods. The preconditions became the criteria for selecting test inputs, and the postconditions provided the properties to check for test results. That is, we turned the postconditions of methods into code for test oracles. A test oracle determines whether or not the results of a test execution are correct [44, 47, 50]. Developing test oracles from postconditions helped avoid dependence of the testing code on the representation data structures, but still required us to write many query methods. In addition, there was no direct connection between the specifications and the test oracles, hence they could easily become inconsistent.

These problems led us to think about ways of testing code that would save us time and effort. We also wanted to have less duplication of effort between the specifications we were writing and the testing code. Finally, we wanted the process to help keep specifications, code, and tests consistent with each other.

1.2 Our Approach

In this paper, we propose a solution to these problems. We describe a simple and effective technique that automates the generation of oracles for testing classes. The conventional way of implementing a test oracle is to compare the test output to some pre-calculated, presumably correct, output [26, 42]. We take a different perspective. Instead of building expected outputs and comparing them to the test outputs, we monitor the specified behavior of the method being tested to decide whether the test passed or failed. This monitoring is done using the formal specification language’s runtime assertion checker. We also show how the user can combine hand-written test inputs with these test oracles.

Our approach thus combines formal specifications (such as JML) and a unit testing framework (such as JUnit).
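The difference between the two kinds of oracle can be illustrated in plain Java. The following is only a minimal sketch under stated assumptions: SketchPerson and OracleStylesDemo are hypothetical names, and the postcondition (taken from the addKgs specification used later in this paper) is checked by hand-written code, whereas in the approach described here that check is performed by the runtime assertion checker.

```java
class OracleStylesDemo {
    // Conventional oracle: compare the actual output with a
    // pre-calculated expected output supplied with the test input.
    static boolean expectedOutputOracle(int kgs, int expectedWeight) {
        SketchPerson p = new SketchPerson();
        p.addKgs(kgs);
        return p.getWeight() == expectedWeight;
    }

    // Specification-based oracle: check the postcondition
    //   kgs >= 0 && weight == \old(weight + kgs)
    // for whatever input is supplied; no expected output is needed.
    static boolean postconditionOracle(SketchPerson p, int kgs) {
        int oldSum = p.getWeight() + kgs;  // pre-state value of weight + kgs
        p.addKgs(kgs);
        return kgs >= 0 && p.getWeight() == oldSum;
    }

    public static void main(String[] args) {
        System.out.println(expectedOutputOracle(10, 10));                 // true
        System.out.println(postconditionOracle(new SketchPerson(), 10));  // true
        System.out.println(postconditionOracle(new SketchPerson(), -5));  // false
    }
}

// Hypothetical stand-in for a class under test (no JML tooling involved).
class SketchPerson {
    private int weight = 0;
    void addKgs(int kgs) { weight += kgs; }
    int getWeight() { return weight; }
}
```

The first oracle needs one expected value per test input, while the second can be reused unchanged for any input the tester supplies.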

Formal interface specifications include class invariants and pre- and postconditions. We assume that these specifications are fairly complete descriptions of the desired behavior. Although the testing process will encourage the user to write better preconditions, the quality of the generated test oracles will depend on the quality of the specification’s postconditions. The quality of these postconditions is the user’s responsibility, just as the quality of hand-written test oracles would be.

We wrote a tool, jmlunit, to automatically generate JUnit test classes from JML specifications. The generated test classes send messages to objects of the Java classes under test; they catch assertion violation exceptions from test cases that pass an initial precondition check. Such assertion violation exceptions are used to decide if the code failed to meet its specification, and hence that the test failed. If the class under test satisfies its interface specification for some particular input values, no such exceptions will be thrown, and that particular test execution succeeds. So the automatically generated test code serves as a test oracle whose behavior is derived from the specified behavior of the target class. (There is one complication which is explained in Section 4.) The user is still responsible for generating test data; however the generated test classes make it easy for the user to add test data.

1.3 Outline

The remainder of this paper is organized as follows. In Section 2 we describe the capabilities our approach assumes from a formal interface specification language and its runtime assertion checker, using JML as an example. In Section 3 we describe the capabilities our approach assumes from a testing framework, using JUnit as an example. In Section 4 we explain our approach in detail; we discuss design issues such as how to decide whether tests fail or not, test fixture setup, and explain the automatic generation of test methods and test classes. In Section 5 we discuss how the user can add test data by hand to the automatically generated test classes. In Section 6 we discuss other issues. In Section 7 we describe related work and we conclude, in Section 8, with a description of our experience, future plans, and the contributions of our work.

2 Assumptions About the Formal Specification Language

Our approach assumes that the formal specification language specifies the interface (i.e., names and types) and behavior (i.e., functionality) of classes and methods. We assume that the language has a way to express class invariants and method specifications consisting of pre- and postconditions.

Our approach can also handle specification of some more advanced features. One such feature is an intra-condition, usually written as an assert statement. Another is a distinction between normal and exceptional postconditions. A normal postcondition describes the behavior of a method when it returns without throwing an exception; an exceptional postcondition describes the behavior of a method when it throws an exception.

The Java Modeling Language (JML) [36, 37] is an example of such a formal specification language. JML specifications are tailored to Java, and its assertions are written in a superset of Java’s expression language.

Figure 1 shows an example JML specification. As shown, a JML specification is commonly written as annotation comments in a Java source code file. Annotation comments start with //@ or are enclosed in /*@ and @*/. Within the latter kind of comment, at-signs (@) at the beginning of lines are ignored. The spec_public annotation lets non-public declarations such as the private fields name and weight be considered public for specification purposes.¹ The fourth line of the figure gives an example of an invariant, which should be true in each publicly-visible state.

    public class Person {
        private /*@ spec_public @*/ String name;
        private /*@ spec_public @*/ int weight;
        //@ public invariant name != null && name.length() > 0 && weight >= 0;

        /*@ public behavior
          @   requires n != null && n.length() > 0;
          @   assignable name, weight;
          @   ensures n.equals(name) && weight == 0;
          @   signals (Exception e) false;
          @*/
        public Person(String n) { name = n; weight = 0; }

        /*@ public behavior
          @   assignable weight;
          @   ensures kgs >= 0 && weight == \old(weight + kgs);
          @   signals (IllegalArgumentException e) kgs < 0;
          @*/
        public void addKgs(int kgs) { weight += kgs; }

        /*@ public behavior
          @   ensures \result == weight;
          @   signals (Exception e) false;
          @*/
        public /*@ pure @*/ int getWeight() { return weight; }

        /* ... */
    }

Figure 1: An example JML specification. The implementation of the method addKgs contains an error to be revealed in Section 5.3. This error was overlooked in our initial version of this paper, and so is an example of a “real” error.

In JML, method specifications precede the corresponding method declarations. Method preconditions start with the keyword requires, frame axioms start with the keyword assignable, normal postconditions start with the keyword ensures, and exceptional postconditions start with the keyword signals [28, 36, 37]. The semantics of such a JML specification states that a method’s precondition must hold before the method is called. When the precondition holds, the method must terminate and when it does, the appropriate postconditions must hold. If it returns normally, then its normal postcondition must hold in the post-state (i.e., the state just after the body’s execution), but if it throws an exception, then the appropriate exceptional postcondition must hold in the post-state. For example, the constructor must return normally when called with a non-null, non-empty string n. It cannot throw an exception because the corresponding exceptional postcondition is false.

JML has lots of syntactic sugar that can be used to highlight various properties for the reader and to make specifications more concise. For example, one can omit the requires clause if the precondition is true, as in the specification of addKgs. However, we will not discuss these sugars in detail here.

¹ As in Java, a field specification can have an access modifier determining its visibility. If not specified, the visibility defaults to the visibility of the Java declaration; i.e., without the spec_public annotations, both name and weight could be used only in private specifications.

JML follows Eiffel [39, 40] in having special syntax, written \old(E), to refer to the pre-state value of the expression E, i.e., the value of E just before execution of the body of the method. This is often used in situations like that shown in the normal postcondition of addKgs.

For a non-void method, such as getWeight, \result can be used in the normal postcondition to refer to the return value. The method getWeight is specified to be pure, which means that its execution cannot have any side effects. In JML, only pure methods can be used in assertions.

In addition to pre- and postconditions, one can also specify intra-conditions with specification statements such as the assert statement.

2.1 The Runtime Assertion Checker

The basic task of the runtime assertion checker is to execute code in a way that is transparent, unless an assertion is violated. That is, if a method is called and no assertion violations occur, then, except for performance measures (time and space) the behavior of the method is unchanged. In particular, this implies that, as in JML, assertions can be executed without side effects.

We do not assume that the runtime assertion checker can execute all assertions in the specification language. However, only the assertions that it can execute are of interest in this paper.

We assume that the runtime assertion checker has a way of signaling assertion violations to the callers of a method. In practice this is most conveniently done by using exceptions. While any systematic mechanism for indicating assertion violations would do, to avoid circumlocutions, we will assume that exceptions are used in the remainder of this paper.

The runtime assertion checker must have some exceptions that it can use without interference from user programs. These exceptions are thus reserved for use by the runtime assertion checker. We call such exceptions assertion violation exceptions. It is convenient to assume that all such assertion violation exceptions are subtypes of a single assertion violation exception type.

JML’s runtime assertion checker can execute a constructive subset of JML assertions, including some forms of quantifiers [9, 13, 14]. In functionality, it is similar to other design by contract tools [34, 39, 40, 48]; such tools could also be used with our approach.

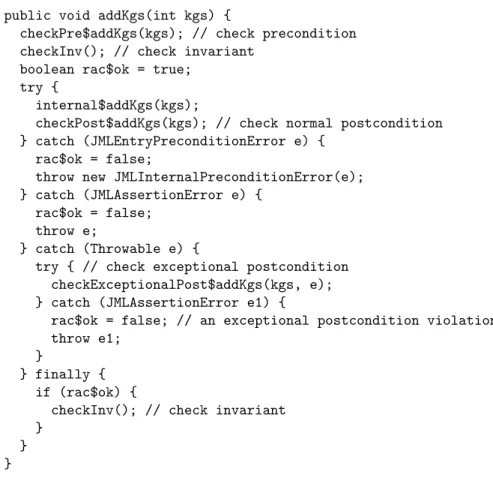

To explain how JML’s runtime assertion checker monitors Java code for assertion violations, it is necessary to explain the structure of the instrumented code compiled by the JML compiler. Each Java class and method with associated JML specifications is instrumented, as shown by example in Figure 2. The original method becomes a private method, e.g., addKgs becomes internal$addKgs. The checker generates a new method, e.g., addKgs, to replace it, which calls the original method, internal$addKgs, inside a try statement.

The generated method first checks the method’s precondition and class invariant, if any.² If these assertions are not satisfied, this check throws either a JMLEntryPreconditionError or a JMLInvariantError. After the original method is executed in the try block, the normal postcondition is checked, or, if exceptions were thrown, the exceptional postcondition is checked in the third catch block. To make assertion checking transparent, the code that checks the exceptional postcondition re-throws the original exception if the exceptional postcondition is satisfied; otherwise, it throws a JMLNormalPostconditionError or JMLExceptionalPostconditionError. In the finally block, the class invariant is checked again. The purpose of the first catch block is explained below (see Section 4.1).

Our approach assumes that the runtime assertion checker can distinguish two kinds of precondition violations: entry precondition violations and internal precondition violations. The former refers to violations of preconditions of the method being tested. The latter refers to precondition violations that arise during the execution of the body of the method under test (refer to Section 4.1 for a detailed explanation). Other distinctions among assertion violations are useful in reporting errors to the user, but are not important for our approach.

² To handle old expressions (as used in the postcondition of addKgs), the instrumented code evaluates each old expression occurring in the postconditions from within the checkPre$addKgs method, and binds the resulting value to a private field of the class. The corresponding private field is used when checking postconditions.

    public void addKgs(int kgs) {
        checkPre$addKgs(kgs);  // check precondition
        checkInv();            // check invariant
        boolean rac$ok = true;
        try {
            internal$addKgs(kgs);
            checkPost$addKgs(kgs);  // check normal postcondition
        } catch (JMLEntryPreconditionError e) {
            rac$ok = false;
            throw new JMLInternalPreconditionError(e);
        } catch (JMLAssertionError e) {
            rac$ok = false;
            throw e;
        } catch (Throwable e) {
            try {  // check exceptional postcondition
                checkExceptionalPost$addKgs(kgs, e);
            } catch (JMLAssertionError e1) {
                rac$ok = false;  // an exceptional postcondition violation
                throw e1;
            }
        } finally {
            if (rac$ok) {
                checkInv();  // check invariant
            }
        }
    }

Figure 2: The top-level of the run-time assertion checker’s translation of the addKgs method in class Person. (Some details have been suppressed.)

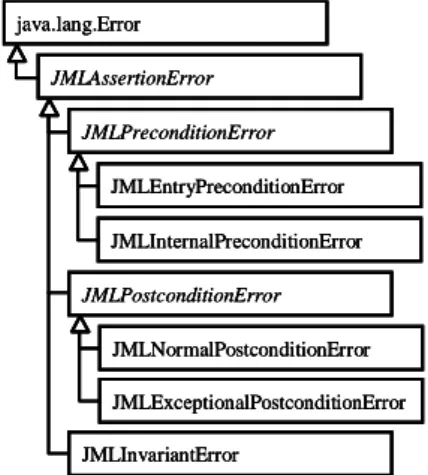

In JML the assertion violation exceptions are organized into an exception hierarchy as shown in Figure 3. The abstract class JMLAssertionError is the ultimate superclass of all assertion violation exceptions. It has several subclasses that correspond to different kinds of assertion violations, such as precondition violations, postcondition violations, invariant violations, and so on. Our assumed entry precondition and internal precondition violations are realized by the types JMLEntryPreconditionError and JMLInternalPreconditionError. Both are concrete subclasses of the abstract class JMLPreconditionError.
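The distinction between entry and internal precondition violations can be sketched with a small self-contained Java program. This is an illustration under stated assumptions, not the code produced by the JML compiler: the Sketch* exception classes are simplified stand-ins for the JML types named above, and SketchCounter, with its precondition count > 0 on decrement, is a hypothetical example class whose wrappers are written by hand.

```java
class HierarchyDemo {
    // Returns the simple class name of the assertion violation
    // thrown by the action, or "none" if it completes normally.
    static String violationKind(Runnable action) {
        try {
            action.run();
            return "none";
        } catch (SketchAssertionError e) {
            return e.getClass().getSimpleName();
        }
    }

    public static void main(String[] args) {
        // Caller violates decrement's precondition: entry violation.
        System.out.println(violationKind(() -> new SketchCounter(0).decrement()));
        // decrementTwice's own body violates decrement's precondition:
        // reported to decrementTwice's caller as an internal violation.
        System.out.println(violationKind(() -> new SketchCounter(1).decrementTwice()));
        System.out.println(violationKind(() -> new SketchCounter(2).decrementTwice()));
    }
}

// Simplified stand-ins for part of the hierarchy described in the text.
abstract class SketchAssertionError extends Error {
    SketchAssertionError(String message) { super(message); }
}
abstract class SketchPreconditionError extends SketchAssertionError {
    SketchPreconditionError(String message) { super(message); }
}
class SketchEntryPreconditionError extends SketchPreconditionError {
    SketchEntryPreconditionError(String message) { super(message); }
}
class SketchInternalPreconditionError extends SketchPreconditionError {
    SketchInternalPreconditionError(String message) { super(message); }
}

// Hypothetical class with hand-written checking wrappers.
class SketchCounter {
    private int count;
    SketchCounter(int initial) { count = initial; }

    // Wrapper for decrement; assumed precondition: count > 0.
    void decrement() {
        if (!(count > 0)) {
            throw new SketchEntryPreconditionError("decrement requires count > 0");
        }
        count--;  // original body
    }

    // Wrapper for decrementTwice; its precondition is true.
    void decrementTwice() {
        try {
            internalDecrementTwice();
        } catch (SketchEntryPreconditionError e) {
            // A call made by the body failed its precondition check,
            // so the fault lies with this method, not with its caller.
            throw new SketchInternalPreconditionError(e.getMessage());
        }
    }

    private void internalDecrementTwice() { decrement(); decrement(); }
}
```

Run as written, the program prints SketchEntryPreconditionError, SketchInternalPreconditionError, and none, in that order.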

3 Assumptions About the Testing Framework

Our approach assumes that separate tests are to be run for each method of each class being tested. We assume that the framework provides test objects, which are objects with at least one method that can be called to execute a test. We call such methods of a test object test methods. We also assume that one can make composites of test objects, that is, that one can group several test objects into another test object, which, following JUnit’s terminology, we will call a test suite.

[Figure 3: class diagram showing java.lang.Error, JMLAssertionError, JMLPreconditionError, JMLEntryPreconditionError, JMLInternalPreconditionError, JMLPostconditionError, JMLNormalPostconditionError, JMLExceptionalPostconditionError, and JMLInvariantError.]

Figure 3: A part of the exception hierarchy for JML runtime assertion violations.

We further assume that a test method can indicate to the framework whether each test case fails, succeeds, or is meaningless. Following JUnit, a test failure means that a bug or other problem was found; success means that the code was not found to be incorrect. The outcome of a test is said to be meaningless or rejected if an entry precondition violation occurs for the test case. Such a test case is inappropriate to test the method, as it is outside the domain of the method under test; details are in Section 5.3.

By assuming that the framework provides test objects, which can execute a test, we are also assuming that there is a way to provide test data to test objects.

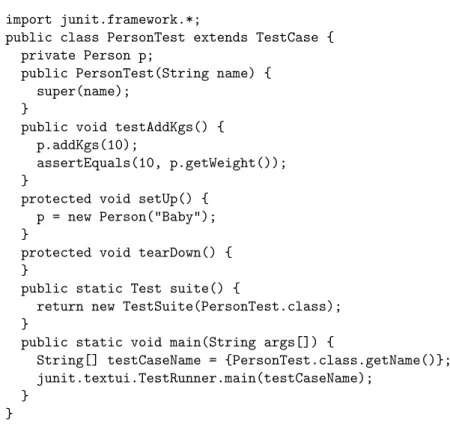

JUnit is a simple, useful testing framework for Java [7, 30]. In the simplest way to use JUnit, a test object is an object of a subclass of the framework class TestCase that is written with several test methods. By convention, each test method has a name beginning with “test”, returns nothing and takes no arguments. When this is done, the framework can then use Java’s introspection API to find all such test methods, and can run them when requested.
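The naming convention can be illustrated with Java’s reflection API. The following is a minimal sketch of the idea, not JUnit’s actual implementation; SampleTests and TestMethodFinder are hypothetical names, and the selection criteria are the ones stated above (public, name beginning with “test”, no arguments, no result).

```java
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

class TestMethodFinder {
    // Collects the names of the public methods of c that follow the
    // convention: name starts with "test", no parameters, returns void.
    static List<String> findTestMethods(Class<?> c) {
        List<String> names = new ArrayList<>();
        for (Method m : c.getMethods()) {  // getMethods() yields public methods only
            boolean followsConvention = m.getName().startsWith("test")
                    && m.getParameterCount() == 0
                    && m.getReturnType() == void.class;
            if (followsConvention) {
                names.add(m.getName());
            }
        }
        Collections.sort(names);  // reflection does not guarantee an order
        return names;
    }

    public static void main(String[] args) {
        System.out.println(findTestMethods(SampleTests.class));
        // prints [testAddKgs, testGetWeight]
    }
}

// Hypothetical class used only to exercise the finder.
class SampleTests {
    public void testAddKgs() { }
    public void testGetWeight() { }
    public void helper() { }                    // wrong name
    public int testWithResult() { return 0; }   // returns a value
    public void testWithArgument(int x) { }     // takes an argument
}
```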

Figure 4 is a sample JUnit test class, which is designed to test the class Person. A subclass of TestCase, such as PersonTest, functions both as a class describing a test object and as one describing a test suite. The methods inherited from TestCase provide basic facilities for asserting test success or failure and dealing with global test state. TestCase also provides facilities for its subclasses to act as composites, since it allows them to contain a number of test methods and aggregate the test objects formed from them into a test suite.

In direct use of JUnit, one uses methods like assertEquals, defined in the framework, to write test methods, as in the test method testAddKgs. Such methods indicate test success or failure to the framework. For example, when the arguments to assertEquals are not equal, the test fails. Another such framework method is fail, which directly indicates test failure. JUnit assumes that a test succeeds unless the test method throws an exception or indicates test failure. Thus the only way a test method can indicate success is to return normally.

JUnit thus does not provide a way to indicate that a test execution was meaningless. This is because it is geared toward counting executions of test methods instead of test cases, and because hand-written tests are assumed to be meaningful. However, in our approach we need to extend JUnit to allow the counting of test case executions and to track which test cases were meaningful. We extended the JUnit framework to do this by writing a class JMLTestRunner, which tracks the meaningful test cases executed.

JUnit provides two methods to manipulate the global data or state associated with running tests: the setUp method creates objects and does any other tasks needed to run a test, and the tearDown method undoes otherwise permanent side-effects of tests. For example, the setUp method in Figure 4 creates a new Person object, and assigns it to the test fixture variable p. Both methods can be omitted if they do nothing. JUnit automatically invokes the setUp and tearDown methods before and after each test method is executed (respectively).

    import junit.framework.*;

    public class PersonTest extends TestCase {
        private Person p;

        public PersonTest(String name) {
            super(name);
        }

        public void testAddKgs() {
            p.addKgs(10);
            assertEquals(10, p.getWeight());
        }

        protected void setUp() {
            p = new Person("Baby");
        }

        protected void tearDown() { }

        public static Test suite() {
            return new TestSuite(PersonTest.class);
        }

        public static void main(String args[]) {
            String[] testCaseName = {PersonTest.class.getName()};
            junit.textui.TestRunner.main(testCaseName);
        }
    }

Figure 4: A sample JUnit test class.

The static method suite creates a test suite, i.e., a collection of test objects created from the test methods found in a subclass of TestCase. To run tests, JUnit first obtains a test suite by invoking the method suite, and then runs each test in the suite. Besides tests, a test suite can also contain several other test suites. Figure 4 uses Java’s reflection facility to create a test suite consisting of all the test methods of class PersonTest.
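The composite structure of test suites and the bracketing of each test by setUp and tearDown can be sketched as follows. This is a simplified model written for illustration, not JUnit’s code: MiniTest, MiniCase, MiniSuite, and MiniSuiteDemo are hypothetical names, and each test merely records its name in a log so that the order of calls is visible.

```java
import java.util.ArrayList;
import java.util.List;

class MiniSuiteDemo {
    // Builds a test object with an empty body and the given name.
    static MiniTest named(String name) {
        return new MiniCase(name) {
            protected void body() { }
        };
    }

    // Runs a suite that contains one test and one nested suite.
    static List<String> runDemo() {
        MiniSuite inner = new MiniSuite().add(named("testB"));
        MiniSuite outer = new MiniSuite().add(named("testA")).add(inner);
        List<String> log = new ArrayList<>();
        outer.run(log);
        return log;
    }

    public static void main(String[] args) {
        System.out.println(runDemo());
        // prints [setUp, testA, tearDown, setUp, testB, tearDown]
    }
}

// A test object: anything that can be run.
interface MiniTest {
    void run(List<String> log);
}

// A single test: setUp, then the test body, then tearDown (always).
abstract class MiniCase implements MiniTest {
    private final String name;
    MiniCase(String name) { this.name = name; }

    protected void setUp(List<String> log) { log.add("setUp"); }
    protected void tearDown(List<String> log) { log.add("tearDown"); }
    protected abstract void body();

    public void run(List<String> log) {
        setUp(log);
        try {
            body();
            log.add(name);
        } finally {
            tearDown(log);  // runs even if the body throws
        }
    }
}

// A suite is itself a test object that runs the tests it contains,
// which may in turn be suites.
class MiniSuite implements MiniTest {
    private final List<MiniTest> tests = new ArrayList<>();
    MiniSuite add(MiniTest t) { tests.add(t); return this; }

    public void run(List<String> log) {
        for (MiniTest t : tests) {
            t.run(log);
        }
    }
}
```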

Our goal in automatically generating test oracle code was to preserve as much of this functionality as possible. In particular, it is important to run the user’s setUp and tearDown methods before and after each test. However, instead of writing test methods as methods with names beginning with “test”, the automatically generated code makes very heavy use of the ability to create test suites from generated test objects.

4 Test Oracle Generation

This section presents the details of our approach to automatically generating JUnit tests from a JML-annotated Java class or interface. We will use the term type to mean either a class or an interface.

Our tool, jmlunit, produces two Java classes, in two files, for each JML-annotated Java type, C. The first is named C_JML_TestData. It is a subclass of the JUnit framework class TestCase. The C_JML_TestData class supplies the actual test data for the tests; more precisely, in the current tool, users edit this class to supply the test data. The second is the class C_JML_Test, which is a test oracle class; it holds the code for running the tests and for deciding test success or failure. For example, running our tool on the class Person produces two classes, a skeleton of the class Person_JML_TestData, and its subclass, Person_JML_Test. However, the file Person_JML_TestData.java is not generated if it already exists; otherwise the user’s test data might be lost.

In the rest of this section, we describe the contents of the class C_JML_Test generated by the tool. The presentation is bottom-up. Subsection 4.1 describes how test outcomes are determined. Subsection 4.2 describes the methods used to access test data, which allows its superclass, C_JML_TestData, to supply the test data used. (Users might want to skip to Section 5 after reading Subsection 4.2.) After that we discuss in detail the automatic generation of test objects, test methods, and test suites. Section 5 describes the details of supplying test data in the C_JML_TestData class.

4.1 Deciding Test Outcomes

In JUnit, a test object implements the interface Test and thus has one test method, run(), which can be used to run a test consisting of several test methods. In our use of JUnit, each test method runs a single test case and tests a single method or constructor. Conceptually, a test case for an instance method consists of a pair of a receiver object (an instance of the class being tested) and a tuple of argument values; for testing static methods and constructors, a test case does not include the receiver object.

The outcome of a call to a method or constructor, M, for a given test case, is determined by whether the runtime assertion checker throws an exception during M’s execution, and what kind of exception is thrown. If no exception is thrown, then the test case succeeds (assuming the call returns), because there was no assertion violation, and hence the call must have satisfied its specification.

Similarly, if the call to M for a given test case throws an exception that is not an assertion violation exception, then this also indicates that the call to M succeeded for this test case. Such exceptions are passed along by the runtime assertion checker because it is assumed to be transparent. Hence if the call to M throws such an exception instead of an assertion violation exception, then the call must have satisfied M’s specification, specifically, its exceptional postcondition. With JUnit, such exceptions must, however, be caught by the test method, since any such exceptions are interpreted by the framework as signaling test failure. Hence, the test method must catch and ignore all exceptions that are not assertion violation exceptions.³

If the call to M for a test case throws an assertion violation exception, however, things become interesting. If the assertion violation exception is not a precondition exception, then the method M is considered to fail that test case.

However, we have to be careful with the treatment of precondition violations. A precondition is an obligation that the client must satisfy; nothing else in the specification is guaranteed if the precondition is violated. Therefore, when a test method calls a method M and M’s precondition does not hold, we do not consider that to be a test failure; rather, when M signals a precondition exception, it indicates that the given test input is outside M’s domain, and thus is inappropriate for test execution. We call the outcome of such a test execution “meaningless” instead of calling it either a success or failure. On the other hand, precondition violations that arise inside the execution of M should still be considered to be test failures. To do this, we distinguish two kinds of precondition violations that may occur when a test method runs M on a test case, say tc:

• The precondition of M fails for tc, which indicates, as above, that the test case tc is outside M’s domain. As noted earlier, this is called an entry precondition violation.

• A method N called from within M’s body signals a precondition violation, which indicates that M’s body did not meet N’s precondition, and thus that M failed to correctly implement its specification on the test case tc. (Note that if M calls itself recursively, then N may be the same as M.) Such an assertion violation is an internal precondition violation.

Footnote 3: Catching and ignoring exceptions does interact with some of JML’s syntactic sugars, however. We found that if the user does not specify an exceptional postcondition, it seems best to treat exceptions as if they were assertion violations, instead of using a default that allows them to be treated as test successes. The JML compiler (jmlc) has an option (-U) to compile files with this behavior.

The JML runtime assertion checker converts the second kind of precondition violation into an internal precondition violation exception. Thus, a test method decides that M fails on a test case tc if M throws an internal precondition violation exception, but rejects the test case tc as meaningless if it throws an entry precondition violation exception. This treatment of precondition exceptions was the main change that we had to make to JML’s existing runtime assertion checker to implement our approach. The treatment of meaningless test case executions is also the only place where we had to extend the JUnit testing framework.

Our implementation of this decision process is shown in the method runTest in Figure 5. The user is informed of what is happening during the testing process by the JUnit framework’s use of the observer pattern. Most of this is done by the JUnit framework, but the call to the addMeaningless method also informs listeners of what is happening during testing (see Section 4.4). The doCall method is an abstract method that, when overridden in a particular test object, will call the method being tested with the appropriate arguments for that test case. The test’s outcome is recorded as discussed above. There are only two tricky aspects to this. The first is adjusting the assertion failure error object so that the information it contains is of maximum utility to the user. This is accomplished by deleting the stack backtrace that would show only the test harness methods, which happens in the call to the setStackTrace method, and adding the original exception as a “cause” of this error. The second tricky aspect is that the runtime assertion checker must be turned off when indicating test failure, and turned back on again after that is done. This is needed so that the report about the problem can call toString methods of the various receiver and argument objects without finding the same violations (for example, invariant violations) that caused the original test failure.

To summarize, the outcome of a test execution is “failure” if an assertion violation exception other than an entry precondition violation is thrown, is “meaningless” if an entry precondition violation is thrown, and “success” otherwise.
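As an illustration, the three-way decision summarized above can be sketched in a few lines of Java. This is a minimal sketch, not the generated test code: the two error classes are local stand-ins that only borrow the names used in Figure 5, the subclass relationship between them is an assumption suggested by the order of the catch clauses there, and Outcome, Call, and OutcomeClassifier are hypothetical names introduced for the example.

```java
class OutcomeClassifier {
    /** Classify one test execution by the kind of exception it raises. */
    static Outcome classify(Call call) {
        try {
            call.run();
            return Outcome.SUCCESS;       // no exception at all
        } catch (JMLEntryPreconditionError e) {
            return Outcome.MEANINGLESS;   // test input is outside the method's domain
        } catch (JMLAssertionError e) {
            return Outcome.FAILURE;       // any other assertion violation
        } catch (Throwable e) {
            return Outcome.SUCCESS;       // ordinary exception, allowed by the specification
        }
    }

    public static void main(String[] args) {
        System.out.println(classify(() -> { }));
        System.out.println(classify(() -> { throw new JMLEntryPreconditionError("pre"); }));
        System.out.println(classify(() -> { throw new JMLAssertionError("post"); }));
    }
}

/** Stand-in for the JML runtime's assertion error (name taken from Figure 5). */
class JMLAssertionError extends Error {
    JMLAssertionError(String msg) { super(msg); }
}

/** Stand-in for the entry precondition error; assumed to be a subclass. */
class JMLEntryPreconditionError extends JMLAssertionError {
    JMLEntryPreconditionError(String msg) { super(msg); }
}

/** Hypothetical outcome labels for a single test execution. */
enum Outcome { SUCCESS, FAILURE, MEANINGLESS }

/** Hypothetical wrapper for the call to the method under test. */
interface Call {
    void run() throws Throwable;
}
```

The order of the catch clauses matters: the entry precondition case has to be tested before the general assertion violation case, since in this sketch the former is a subtype of the latter.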

4.2 Test Data Access

In this section we describe the interface that a C_JML_Test class uses to access user-provided test data. Recall that this test data for testing a class C is defined in a class named C_JML_TestData that is a superclass of C_JML_Test; thus the interface described in this subsection describes how these classes communicate. In this section we describe only that interface; the ways that the user can implement it in the C_JML_TestData class are described in Section 5.

Test data provided in the C_JML_TestData class are used to construct receiver objects and argument objects that form test cases for testing various methods. For example, a test case for the method addKgs of class Person (see Figure 1) requires one object of type Person and one value of type int. The first object will be the receiver of the message addKgs, and the second will be the argument. In our approach, there is no need to construct expected outputs, because success or failure is determined by observing the runtime assertion checker, not by comparing results to expected outputs.

What interface is used to access the test data needed to build test cases for testing a method M ? There are several possibilities:

• Separate test data. For each method M to be tested, there is a separate set of test data. This results in a very flexible and customizable configuration. However, defining such fixture variables becomes complicated and requires more work from the user.

• Global test data. All test methods share the same set of test data. The approach is simple and intuitive, and thus defining the test data requires less work from the user. However, the user has less control in that, because of shared test data, it becomes hard to supply specific test cases for testing specific methods.

• Combination. Some combination of the above two approaches, which has a simple and intuitive test fixture configuration, and yet gives the user more control.

    public void runTest() throws Throwable {
        try {
            // The call being tested!
            doCall();
        }
        catch (JMLEntryPreconditionError e) {
            // meaningless test input
            addMeaningless();
        } catch (JMLAssertionError e) {
            // test failure
            int l = JMLChecker.getLevel();
            JMLChecker.setLevel(JMLOption.NONE);
            try {
                String failmsg = this.failMessage(e);
                AssertionFailedError err = new AssertionFailedError(failmsg);
                err.setStackTrace(new StackTraceElement[]{});
                err.initCause(e);
                result.addFailure(this, err);
            } finally {
                JMLChecker.setLevel(l);
            }
        } catch (Throwable e) {
            // test success
        }
    }

Figure 5: Template method abstraction of running a test case, showing how failure, success, and meaningless tests are decided.

Our earlier work [15] adopted the global test data approach. The rationale was that the more test cases the better, and that simplicity of use would outweigh the benefit of more control. There would be no harm in running test methods with test cases of other methods (when these are type-compatible). Some test cases might violate the precondition; however, entry precondition violations are not treated as test failures, and so such test cases cause no problems.

However, our experience showed that more control was necessary, in particular to make it possible to produce clones of test data so that test executions do not interfere with each other (see footnote 4). Thus a combination approach is used in the current implementation.

In the combination approach we have adopted, there is an abstract interface between the test driver and the user-supplied C JML TestData class that defines the test data.

5The exact in- terface used depends on whether the type of data, T , is a reference type or a primitive value

4Also, in our earlier implementation, all of the test fixture variables were reinitialized for each call to the method under test, which resulted in very slow testing; most of this reinitialization was unnecessary.

5We thank David Cok for pointing out problems with the earlier approach and discussing such extensions.

/** Return a new, freshly allocated indefinite iterator that

* produces test data of type T. */

public IndefiniteIterator vT Iter(String methodName, int loopsThisSurrounds);

Figure 6: Methods used for accessing test data of a reference type T by the test oracle class.

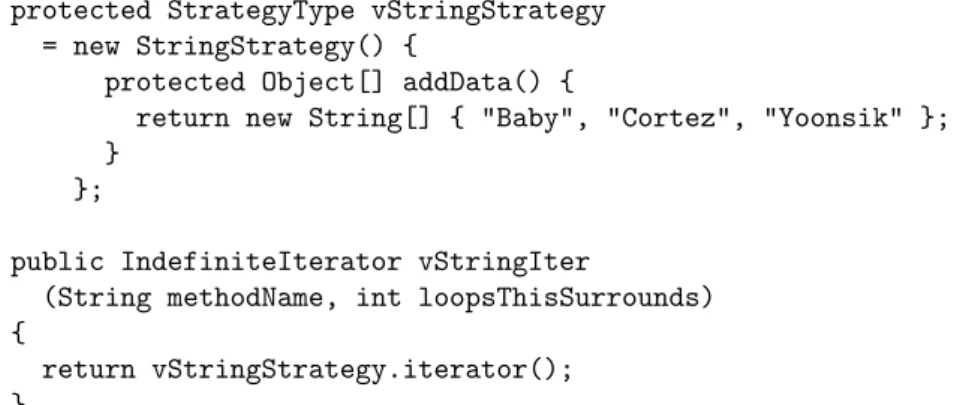

type. For a reference type T , the user must define the method shown in Figure 6. The type IndefiniteIterator is a special iterator type for supplying test data, and is defined in a package org.jmlspecs.jmlunit.strategies. Iterators are convenient for supplying test data because they are easy to combine, filter, etc. The indefinite iterator interface is shown in Figure 7.

For primitive value types, such as int, short, float, char, and boolean, the interface used is slightly different, since boxing primitive values into objects, and unboxing them later, would be inefficient. Thus, for example, to access data of type int, the drivers require the user to define the method shown in Figure 8. The type IntIterator is an extension of IndefiniteIterator that provides direct access to the values of type int, using the method getInt() instead of get().

To give the user control over what test data is used to test what methods and for which arguments of those methods, the test data access method in Figure 6 has two arguments. The first argument, the string methodName, gives the name of the method being tested; this allows different data to be used for different test methods. For example, the user can avoid test data that would cause a time-consuming method to run for a long time by using different test data for just that method. The second argument, loopsThisSurrounds, allows the program to distinguish between data generated for different arguments of a method that takes multiple arguments of a given type. It can also be used to help control the number of tests executed. A user who does not want such control can simply ignore one or both of these arguments.
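To make the role of the two arguments concrete, the following sketch shows one way a user might choose int test data based on them. It is only an illustration under simplifying assumptions: it returns a plain int array rather than an iterator, and the class name IntDataSelection, the method name intDataFor, and the method name "slowMethod" are all hypothetical.

```java
class IntDataSelection {
    /** Hypothetical selection of int test data, keyed on the two arguments. */
    static int[] intDataFor(String methodName, int loopsThisSurrounds) {
        if (methodName.equals("slowMethod")) {
            // Keep a time-consuming method cheap by giving it very little data.
            return new int[] { 0, 1 };
        }
        if (loopsThisSurrounds > 0) {
            // This data feeds a loop that surrounds other loops: use fewer values
            // to keep the number of generated test cases down.
            return new int[] { -1, 0, 1 };
        }
        // Innermost loop: use the full set, including boundary values.
        return new int[] { Integer.MIN_VALUE, -1, 0, 1, Integer.MAX_VALUE };
    }

    public static void main(String[] args) {
        System.out.println(intDataFor("addKgs", 0).length);
        System.out.println(intDataFor("slowMethod", 0).length);
    }
}
```

A user who ignores both arguments would simply return the same data in every case.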

To let these test data access methods be shared by all test methods in the test oracle class, we adopt a simple naming convention. Each method’s name is the name of its type prefixed by the character ‘v’ and followed by Iter, e.g., vintIter for type int (see footnote 6). If C is a class to be tested and T_1, T_2, ..., T_n are the other formal parameter types of all the methods to be tested in the class C, then test data access methods need to be provided for each distinct type among C, T_1, ..., T_n.

The test cases used are, in general, the cross product of the set of test data supplied for each type.

However, to give the user more control over the test cases, the factory methods shown in Figure 9 are called to create the test suites that are used to accumulate all the tests and the tests for each method M. The user can override the emptyTestSuiteFor method to provide a test suite that, for example, limits the number of tests run for each method to 10. By overriding the overallTestSuite method, one can, for example, use only 70% of the available tests. Users not wanting such control can simply use the defaults provided, as shown in Figure 9, which use all available tests.

For example, for the class Person, the programmer will have to define the test data access methods shown in Figure 10. These are needed because Person’s methods have receivers of type Person, and take arguments of types String (in the constructor) and int (in the constructor and in the method addKgs).

The set of test cases for the method addKgs is thus all tuples of the form (r, i) where r is a non-null object returned by vPersonIter("addKgs",1), and i is an element returned by vintIter("addKgs", 1-1). The second argument, loopsThisSurrounds, is used to distinguish between data generated for different arguments of a method that takes multiple arguments of a given type. Similarly, the set of test cases for the method getWeight consists of all tuples of the form (r) consisting of a non-null object r returned by vPersonIter("getWeight",0).
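The cross product described above can be illustrated with a small sketch. It makes several simplifying assumptions: lists and arrays stand in for the iterators, strings stand in for Person objects so that the example is self-contained, and the names CrossProductSketch and testCases are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class CrossProductSketch {
    /** Pair every non-null receiver with every int argument. */
    static List<Object[]> testCases(List<?> receivers, int[] args) {
        List<Object[]> cases = new ArrayList<>();
        for (Object r : receivers) {
            if (r == null) {
                continue;                       // receivers must be non-null
            }
            for (int i : args) {
                cases.add(new Object[] { r, i });   // the tuple (r, i)
            }
        }
        return cases;
    }

    public static void main(String[] argv) {
        // Strings stand in for Person receivers in this sketch.
        List<Object[]> cases =
            testCases(Arrays.asList("alice", null, "bob"), new int[] { -1, 0, 5 });
        System.out.println(cases.size());       // 2 non-null receivers x 3 ints = 6
    }
}
```

Some of the generated tuples, such as those with a negative argument, may violate the precondition of the method under test; under the treatment of Section 4.1 these would be reported as meaningless rather than as failures.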

Footnote 6: For an array type, the character $ is used to denote its dimension, e.g., vint$$Iter for int[][]. Also, to avoid name clashes, the jmlunit tool uses fully qualified names for reference types; for example, instead of vStringIter, the actual declaration would be for a method named vjava_lang_StringIter. To save space, we do not show the fully qualified names.
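The naming convention can be expressed as a small function. This is a sketch of the convention as we read it from the text, not the jmlunit tool's own code: the class and method names are hypothetical, and the use of an underscore in place of each dot in a qualified name is an assumption.

```java
class AccessMethodNames {
    /** Build the access method name for a type written as in Java source. */
    static String nameFor(String typeName) {
        StringBuilder dims = new StringBuilder();
        String base = typeName;
        while (base.endsWith("[]")) {           // one '$' per array dimension
            dims.append('$');
            base = base.substring(0, base.length() - 2);
        }
        // Assumption: dots in a fully qualified name become underscores.
        return "v" + base.replace('.', '_') + dims + "Iter";
    }

    public static void main(String[] args) {
        System.out.println(nameFor("int"));               // vintIter
        System.out.println(nameFor("int[][]"));           // vint$$Iter
        System.out.println(nameFor("java.lang.String"));  // vjava_lang_StringIter
    }
}
```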

    package org.jmlspecs.jmlunit.strategies;

    import java.util.NoSuchElementException;

    /** Indefinite iterators. These can also be thought of as cursors
     * that point into a sequence. */
    public interface IndefiniteIterator extends Cloneable {

        /** Is this iterator at its end? I.e., would get() not work? */
        /*@ pure @*/ boolean atEnd();

        /** Return the current element in this iteration. This method may
         * be called multiple times, and does not advance the state of the
         * iterator when it is called. The idea is to permit several
         * similar copies to be returned (e.g., clones) each time it is
         * called.
         * @throws NoSuchElementException, when atEnd() is true.
         */
        /*@ public behavior
          @ assignable \nothing;
          @ ensures_redundantly atEnd() == \old(atEnd());
          @ signals (Exception e) e instanceof NoSuchElementException && atEnd();
          @*/
        /*@ pure @*/ Object get();

        /** Advance the state of this iteration to the next position.
         * Note that this never throws an exception. */
        /*@ public normal_behavior
          @ assignable objectState;
          @*/
        void advance();

        /** Return a new iterator with the same state as this. */
        /*@ also
          @ public normal_behavior
          @ assignable \nothing;
          @ ensures \fresh(\result) && \result instanceof IndefiniteIterator;
          @*/
        /*@ pure @*/ Object clone();
    }

Figure 7: The indefinite iterator interface.
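To show how the interface in Figure 7 might be implemented, here is a minimal array-backed sketch. The interface is repeated locally without its JML annotations so that the example is self-contained; the classes ArrayIndefiniteIterator and IteratorDemo are hypothetical and are not taken from the jmlunit library.

```java
import java.util.NoSuchElementException;

class IteratorDemo {
    public static void main(String[] args) {
        IndefiniteIterator it = new ArrayIndefiniteIterator("a", "b");
        while (!it.atEnd()) {
            System.out.println(it.get());   // get() does not advance the iterator
            it.advance();
        }
    }
}

/** Local copy of the interface in Figure 7, without JML annotations. */
interface IndefiniteIterator extends Cloneable {
    boolean atEnd();
    Object get();
    void advance();
    Object clone();
}

/** Hypothetical array-backed implementation of the interface. */
class ArrayIndefiniteIterator implements IndefiniteIterator {
    private final Object[] elems;
    private int pos = 0;

    ArrayIndefiniteIterator(Object... elems) {
        this.elems = elems.clone();         // defensive copy of the test data
    }

    public boolean atEnd() {
        return pos >= elems.length;
    }

    public Object get() {
        if (atEnd()) {
            throw new NoSuchElementException();
        }
        return elems[pos];
    }

    public void advance() {
        if (!atEnd()) {
            pos++;                          // never throws, even at the end
        }
    }

    public Object clone() {
        ArrayIndefiniteIterator copy = new ArrayIndefiniteIterator(elems);
        copy.pos = pos;                     // same position, independent state
        return copy;
    }
}
```

Separating get() from advance() lets a caller read the current element several times, and clone() yields an independent cursor at the same position.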

    /** Return a new, freshly allocated indefinite iterator that
     * produces test data of type int. */
    public IntIterator vintIter(String methodName, int loopsThisSurrounds);

Figure 8: Methods used for accessing test data of the primitive value type int by the test oracle class.

    /** Return the overall test suite for testing; the result
     * holds every test that will be run.
     */
    public TestSuite overallTestSuite() {
        return new TestSuite("Overall test suite");
    }

    /** Return an empty test suite for accumulating tests for the
     * named method.
     */
    public TestSuite emptyTestSuiteFor(String methodName) {
        return new TestSuite(methodName);
    }

Figure 9: Factory methods used for producing test suites, with their default implementations.

    public IndefiniteIterator vPersonIter(String methodName, int loopsThisSurrounds);
    public IndefiniteIterator vStringIter(String methodName, int loopsThisSurrounds);
    public IntIterator vintIter(String methodName, int loopsThisSurrounds);

Figure 10: Test data access methods the programmer must define for testing the class Person.

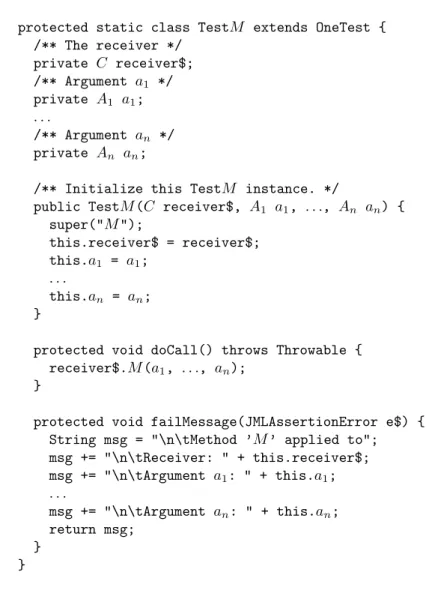

4.3 Test Objects

For each test case, a test object is automatically created to hold the test case’s data and run the corresponding test method. These test objects are created in the C_JML_Test class. Their creation starts with a call to the suite() method of that class. As shown in Figure 11, this method accumulates test objects into the test suite created by the overallTestSuite() factory method described earlier. The addTestSuite() method is used to add a test suite consisting of all the custom testing methods, named testX, that the user may have defined (see footnote 7). Then test suites consisting of test objects for testing each method are also added to this test suite. It is the generation of these test suites, one for each method M to be tested, that will concern us in the remainder of this subsection.

To describe our implementation, let C be a Java type annotated with a JML specification and C_JML_Test the JUnit test class generated from the type C. Recall that C_JML_Test is a subclass of C_JML_TestData. For each instance (i.e., non-static) method of the type C with a signature involving only reference types (where n ≥ 0 and m ≥ 0):

    T M(A_1 a_1, ..., A_n a_n) throws E_1, ..., E_m;

the method shown in Figure 13 is used to generate the test objects and add them to the corresponding test suite. It appears, once for each such method M , in the body of the test class.

The nested for loops in Figure 13 loop over all test cases for method M. Each loop iterates over all test inputs that correspond to the receiver or a formal parameter. The iterators used in the loop are created by calling the vCIter or vA_iIter methods. The vCIter iterator, which is used to provide the receivers, is filtered to take out null elements, using the class NonNullIteratorDecorator. Each iterator used is checked to see that it is not already at its end. This is done to ensure that there is some testing to be done for the method M. If one of the loops had no test data, then no testing would be done for that method.

Footnote 7: Such methods may be written by the user as usual in JUnit.

    public static Test suite() {
        C_JML_Test testobj = new C_JML_Test("C_JML_Test");
        TestSuite testsuite = testobj.overallTestSuite();
        // Add instances of Test found by the reflection mechanism.
        testsuite.addTestSuite(C_JML_Test.class);
        testobj.addTestSuiteForEachMethod(testsuite);
        return testsuite;
    }

    public void addTestSuiteForEachMethod(TestSuite overallTestSuite$) {
        ⟨A copy of the code from Figure 12 for each method and constructor M⟩
    }

Figure 11: The method suite and addTestSuiteForEachMethod. These are part of the C_JML_Test class used to test type C.

    this.addTestSuiteFor$TestM(overallTestSuite$);

Figure 12: The call to the addTestSuiteFor$TestM method for adding tests for the method or constructor M.

The test data in the test case is passed to the constructor of a specially created, nested subclass, TestM (see footnote 8); this constructor stores the data away for the call to the test method. The test object thus created is added to the method’s test suite. If the method’s test suite is full, then adding the test object raises an exception, which causes the loops to terminate early, saving whatever work would otherwise be needed to generate the remaining test data. At the end of Figure 13, the method’s test suite is added to the overall test suite.
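The early termination described above can be sketched as follows. This is an illustration only: BoundedSuite is a hypothetical stand-in for a size-limited test suite such as a user might return from emptyTestSuiteFor, SuiteFullException is a hypothetical exception name, and strings stand in for the test objects.

```java
import java.util.ArrayList;
import java.util.List;

class EarlyTermination {
    /** Fill the suite from the cross product; return how many cases were built. */
    static int fill(BoundedSuite suite, String[] receivers, int[] args) {
        int generated = 0;
        try {
            for (String r : receivers) {
                for (int a : args) {
                    generated++;
                    suite.addTest(r + ".addKgs(" + a + ")");
                }
            }
        } catch (SuiteFullException e) {
            // The suite is full: leave both loops at once, so that no further
            // test data is generated.
        }
        return generated;
    }

    public static void main(String[] argv) {
        BoundedSuite suite = new BoundedSuite(2);
        int built = fill(suite, new String[] { "p1", "p2", "p3" }, new int[] { 1, 2, 3 });
        System.out.println(built + " built, " + suite.size() + " kept");
    }
}

/** Hypothetical exception signalling that a suite accepts no more tests. */
class SuiteFullException extends RuntimeException { }

/** Hypothetical size-limited suite; strings stand in for test objects. */
class BoundedSuite {
    private final int limit;
    private final List<String> tests = new ArrayList<>();

    BoundedSuite(int limit) {
        this.limit = limit;
    }

    void addTest(String test) {
        if (tests.size() >= limit) {
            throw new SuiteFullException();
        }
        tests.add(test);
    }

    int size() {
        return tests.size();
    }
}
```

With a limit of 2 and nine possible test cases, only three cases are constructed before the loops stop; the third is the one whose addition is rejected.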

Figure 13 shows the code generated when all of the argument types, A_i, are reference types. If some of the types A_i are primitive value types, then the appropriate iterator type is used instead of IndefiniteIterator in Figure 13, and instead of calling get(), the argument is obtained by calling the getA_i() method appropriate for the type A_i.

If the method being tested is a static method, then the outermost while loop in Figure 13 is omitted, since there is no need for a receiver object. Constructors (for non-abstract classes) are tested in a similar way.

By default, the jmlunit tool only generates test methods for public methods in the class or interface being tested. It is impossible to test private methods from outside the class, but users can tell the tool whether they would like to also test protected and package visible methods. Normally, the tool only tests methods for which there is code written in the class being tested. However, the tool also has an option to test inherited methods that are not overridden. This is useful because in JML one can add specifications to a method without writing code for it; this can happen, for example, if one inherits specifications through an interface for a method inherited from a superclass.

Also, test methods are not generated for a static public void method named main; testing such a main method seems inappropriate for unit testing.

Figure 14 is an example of the code used to generate test objects for the testing of the addKgs method of the class Person.

Footnote 8: In the implementation, the initial character of M is capitalized in TestM, e.g., TestAddKgs; this follows the Java conventions for class names.